In this last post of my four-part series on microservices, I’ll look at some of the positive aspects of microservices, and how much simpler they can potentially make things once you overcome the up-front effort required to make them work.

Scalability

When a monolithic app needs to scale, how can that be achieved? Well, for example:

- More RAM (if the app is memory-bound)

- More or faster CPUs (if the app is CPU-bound)

- More instances of the app (fronted by a load balancer)

These are all effective ways to scale the application as a whole. But what if one function within the application could really use a performance boost, even though the others are working just fine? With microservices, a load balancer distributes work requests across instances, so scaling up a single module’s capacity for concurrent requests can be as simple as spinning up a few more containers and sharing the load.

There’s some effort required to allow the main program to issue concurrent calls, but the benefits can be worthwhile. Plus, of course, each of our microservices may be called by other programs, or may call each other as necessary, so there may be more than just one source of activity. On the other hand, if the function writes to a shared data source, the concurrency may create complications around data integrity, but those are battles to be fought with any scalable solution.
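As a rough illustration of the idea above, here is a minimal Python sketch (not the PHP used in this series) of a round-robin balancer sharing concurrent calls across four stand-in instances of a multiply service. The names `RoundRobinBalancer`, `make_multiply_instance`, and `run_concurrently` are hypothetical, and plain functions stand in for containers; a real deployment would use an actual load balancer rather than application code.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import cycle
from threading import Lock


class RoundRobinBalancer:
    """Hands out backends in strict rotation; safe to call from many threads."""

    def __init__(self, backends):
        self._backends = cycle(backends)
        self._lock = Lock()

    def call(self, a, b):
        with self._lock:  # only the choice of backend is serialized
            backend = next(self._backends)
        return backend(a, b)  # the work itself runs outside the lock


def make_multiply_instance(name):
    """Stand-in for one container running the multiply service (assumption)."""
    def multiply(a, b):
        return {"instance": name, "answer": a * b}
    return multiply


def run_concurrently(balancer, jobs, workers=4):
    """Issue all jobs through the balancer and return results in job order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        return list(pool.map(lambda job: balancer.call(*job), jobs))


balancer = RoundRobinBalancer(
    [make_multiply_instance(f"multiply-{i}") for i in range(1, 5)]
)
results = run_concurrently(balancer, [(n, n) for n in range(8)])
```

Adding capacity in this sketch means adding another entry to the list of backends; the caller does not change.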

Release Management

Release management really is the big win for an application with microservices. Provided the API remains constant between releases (so that other microservices interact with the upgraded function in the same way and receive the same results), any function can be upgraded independently of the others, which is a huge potential benefit for code releases. By way of an example, let’s look at the simple mathematical application I created in my last post and redesign the multiply function.

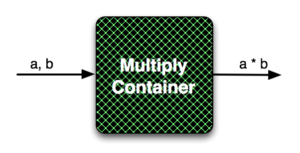

Here’s the current function which is implemented in a microservice style:

Feed the function two integers (a, b) and the function will return the product (a × b). What’s important is that any code issuing a call to this function need have no idea how the function works internally; there’s an API for the request and a standard JSON format for the results. Functionally, the multiply microservice is a black box, so we can change anything we like inside the function. For sure, we need good unit test coverage so that we can be certain that the new code release works the same way as the last one, but beyond that we are free to implement the code however we want.
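To make the black-box idea concrete, here is a small Python sketch (again, not the PHP from this series). The JSON field names `a`, `b`, and `answer`, and the function names `multiply_service` and `caller`, are my assumptions for illustration; the real request and response format is whatever the service’s API defines.

```python
import json


def multiply_service(request_body: str) -> str:
    """Hypothetical handler: JSON request in, JSON result out."""
    params = json.loads(request_body)
    answer = int(params["a"]) * int(params["b"])  # internals are free to change
    return json.dumps({"answer": answer})


def caller(a: int, b: int, service=multiply_service) -> int:
    """The caller depends only on the request and response shapes."""
    response = service(json.dumps({"a": a, "b": b}))
    return json.loads(response)["answer"]
```

Because `caller` only touches the request and response shapes, any replacement for `multiply_service` that honors the same contract can be swapped in without changing the caller.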

The current multiply function is implemented in PHP thus:

    $answer = $val_a * $val_b;

However, recent mathematical research has revealed that the same result can be achieved by adding a to itself b times. Amazing. Since this is a cutting-edge application, I decide to refactor my code to use this breakthrough technique:

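    $answer = 0;
    // Add $val_a once per unit of |$val_b|, then restore the sign.
    for ($loop = 0; $loop < abs($val_b); $loop++) {
        $answer += $val_a;
    }
    if ($val_b < 0) { $answer = -$answer; }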

I can run my tests and confirm that the function returns the same (correct) values as the legacy code. Happily, it’s only a small section of code and I’m an expert in multiplication, so it’s easy for me to test every function thoroughly and be confident that it will work.
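A check of this kind might look like the following Python sketch, which compares the two implementations over a grid of inputs. The function names are hypothetical, and handling a negative `b` by looping `abs(b)` times and then flipping the sign is my assumption about how the repeated-addition version should behave.

```python
import itertools


def multiply_legacy(a: int, b: int) -> int:
    """Reference behaviour: plain multiplication."""
    return a * b


def multiply_by_addition(a: int, b: int) -> int:
    """Repeated addition, with the sign restored for a negative b."""
    answer = 0
    for _ in range(abs(b)):
        answer += a
    return -answer if b < 0 else answer


def find_mismatches(old, new, values):
    """Return every (a, b) pair on which the two implementations disagree."""
    return [
        (a, b)
        for a, b in itertools.product(values, repeat=2)
        if old(a, b) != new(a, b)
    ]


mismatches = find_mismatches(multiply_legacy, multiply_by_addition, range(-20, 21))
```

Including zero and negative values in the grid matters: a loop that simply runs `b` times never executes for a negative `b`, which is exactly the kind of regression this comparison is meant to catch.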

To deploy my new version into production, I can approach this cautiously. There’s no need to jump in and swap everything out at the same time, so how about this instead:

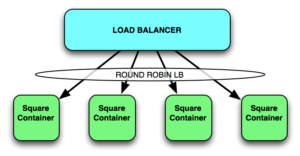

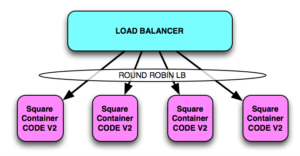

Pre-Change

Four multiply containers are running behind a load balancer in a round-robin configuration.

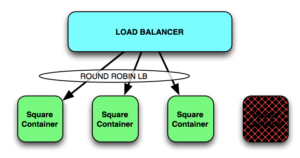

Change Phase 1

One container is taken out of rotation so it is no longer sent new sessions.

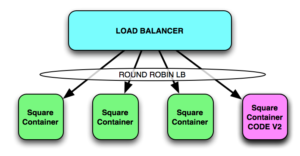

Change Phase 2

A new container is spun up using the amazing new code, and is added to the load balancer to take sessions.

At this point 25% of the service has been migrated to the new code. Since the API is identical, the rest of the code (e.g. the main program) did not need to know that there had been a change.

At this point, the process could pause if needed so that KPIs can be monitored to ensure that there has been no negative impact to the overall service throughput or success. Once done, the other containers can be upgraded one by one using the same process.
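The phased process above can be sketched in a few lines of Python. This is a simulation only: the pool is a list of version labels, and `rolling_upgrade` and the `healthy` callback are hypothetical stand-ins for draining a container, starting its replacement, and checking KPIs.

```python
def rolling_upgrade(pool, new_version, healthy):
    """Replace containers one at a time, checking health after each swap.

    pool: list of version labels, one per container behind the load balancer.
    healthy: callable that takes the current pool and returns True if KPIs look fine.
    Yields a snapshot of the pool after each successful swap.
    """
    for index in range(len(pool)):
        previous = pool[index]
        pool[index] = new_version  # old container out of rotation, new one in
        if not healthy(pool):
            pool[index] = previous  # undo this step and stop the rollout
            return
        yield list(pool)


pool = ["v1"] * 4
snapshots = list(rolling_upgrade(pool, "v2", healthy=lambda p: True))
share_after_first_step = snapshots[0].count("v2") / len(snapshots[0])
```

With four containers, the first snapshot has one of four on the new code, which is the 25% figure mentioned above. A failing health check leaves the remaining containers on the old version.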

Completion

The multiply service has now been fully upgraded.

Assuming that I did my job properly, this upgrade should have been seamless. In true Service Oriented Architecture (SOA) fashion, I should also note that this microservice could be used by more than one ‘main’ program, so in theory I could have upgraded the multiply function for two or more applications simultaneously. There is a whole side argument as to whether that’s a good or bad thing, but it’s easily sidestepped by having two parallel VIPs for the multiply service, initially both pointing to the same set of servers. If we want to upgrade the applications separately, we simply leave the old containers serving the other application while we migrate my squariply app to new containers.
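The two-VIP arrangement can be pictured as a pair of lookups, sketched here in Python. The VIP and pool names are made up for illustration, and dictionaries stand in for real load balancer configuration.

```python
# Both VIPs start out pointing at the same pool of containers.
pools = {"pool-shared": ["v1", "v1", "v1", "v1"]}
vips = {"vip-squariply": "pool-shared", "vip-other-app": "pool-shared"}


def repoint_vip(vips, pools, vip, new_pool_name, new_members):
    """Create a new pool and point a single VIP at it; other VIPs are untouched."""
    pools[new_pool_name] = list(new_members)
    vips[vip] = new_pool_name


def members_for(vips, pools, vip):
    """Return the containers currently serving a given VIP."""
    return pools[vips[vip]]


# Migrate only the squariply app to containers running the new code.
repoint_vip(vips, pools, "vip-squariply", "pool-new", ["v2"] * 4)
```

After the repoint, the other application is still served by the original containers, so each application can be upgraded on its own schedule.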

Scary Things

The examples so far imply that all the services are co-located, but there’s no reason why they have to be, if the application as a whole can handle any latency incurred by doing otherwise. Even internal to a network one could operate geographical load balancing, anycast VIPs (with some TCP caution involved), or share containerized services between more than one cloud hosting company.

The use of containerized microservices has the interesting side effect of abstracting the service from the underlying architecture: if you can route to the VIP or service IP, it can take part in the larger application.

Are Microservices For You?

Microservices really are one of those things where it just depends.

Even assuming that you have a development and operations environment that embraces what we currently refer to as a DevOps mentality, everything I list as a benefit of microservices has a complementary downside that could make them unpalatable for some. Thus without wishing to sit on the fence, the use of microservices is just another tool in the toolbox, and we should all remember that while theoretically, yes, you can use a hammer to insert both screws and nails, deep down you know that one of those is just wrong.

Whatever your feeling on microservices, if you tried to mirror my demo environment I hope you had fun. Continuing to learn is one of the harder things to do in networking, but it’s also one of the most important. Experimenting with technologies like this (or even, preferably, in a better way than this) is a great way to expand horizons and keep motivated. If nothing else, I had fun, and I hope I conveyed some of that in this mini-series.
