As part of Networking Field Day 4, Statseeker presented to the delegates on their network performance monitoring software.

Statseeker is a product with an interesting three-pronged proposition:

- Poll all ports all the way to the edge (not just uplinks and WAN)

- Poll them every 60 seconds

- Keep all the data gathered – don’t roll it up and lose the detail

If you run network performance monitoring software right now – maybe SolarWinds, What’s Up Gold, MRTG, VitalNet or similar – you probably recognize that these are somewhat unusual features for a product in this space. But is it any good, and why would you want it?

Statseeker’s main focus is to allow you to monitor every port in your network, right down to the access layer, to track key statistics for each port once a minute, and to retain that data indefinitely (assuming you have enough storage).

Why Edge Ports?

Statseeker’s argument is that because of scaling problems, many people end up removing monitoring on edge ports and limit themselves to key uplinks and WAN ports, thus potentially missing the source of network issues. My experience bears this out – I’ve often had to set up temporary monitoring on specific ports in order to get data to support a troubleshooting effort, because we just haven’t been able to justify polling every port all the time. So at least from the perspective of looking back at recent events, having data on every port could certainly be helpful.

Why Once A Minute?

Monitoring once a minute gives a pretty good level of granularity for the data collection. My experience though has been that trying to set up my NMS tools to poll that often has led to problems on the server side trying to process so much data, and the more ports being polled, the worse it got. The easy solution was to back off the polling to be less frequent.

Despite this, Statseeker claim the product can scale up to pretty much any number of ports without reducing polling intervals, although the server hardware must play a part in that equation. Given that a well loaded access layer switch could have upwards of 500 ports, and knowing how inefficient SNMP can be, I asked whether Statseeker had seen any problems on the polled devices with CPU as a result of this intense polling. The answer was that Statseeker doesn’t poll all interfaces as a block, but instead uses an undisclosed algorithm to try and determine the capability of the device to answer SNMP queries, then spreads out the polling requests over each 60 second period in order to minimize the instantaneous effect of any polling and try to keep the request level to below half of the determined capability. This could be very important not least because you may also still be running other management products that are polling the same devices, so any software that just sucks data as fast as possible could be a real problem. In fact this is likely to be the case, because as we will see, Statseeker is not going to answer every question you have.
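Statseeker did not disclose how their algorithm works, so the following is only a sketch of the general idea as described: cap the request rate at half of what a device is believed to handle, and spread the polls evenly across the 60 second window rather than sending them as one burst. The function name, the `device_capacity_rps` figure and the one-request-per-interface simplification are all my own assumptions for illustration, not anything Statseeker showed.

```python
def build_poll_schedule(num_interfaces, device_capacity_rps,
                        period_s=60.0, headroom=0.5):
    """Spread one poll per interface evenly across a polling period.

    device_capacity_rps is an assumed estimate of how many SNMP requests
    per second the device can answer; headroom=0.5 keeps the request
    rate at or below half of that estimate.
    Returns a list of (offset_seconds, interface_index) tuples.
    """
    max_rate = device_capacity_rps * headroom   # requests/second we allow
    needed_rate = num_interfaces / period_s     # requests/second required
    if needed_rate > max_rate:
        raise ValueError(
            f"need {needed_rate:.2f} req/s but only {max_rate:.2f} req/s allowed"
        )
    spacing = period_s / num_interfaces         # seconds between requests
    return [(i * spacing, i) for i in range(num_interfaces)]


if __name__ == "__main__":
    schedule = build_poll_schedule(480, device_capacity_rps=40)
    print(schedule[:3], "...", schedule[-1])
```

A real poller would presumably batch several OIDs per request and adapt the capacity estimate over time; the point here is only that an evenly spaced schedule keeps the instantaneous load low and leaves room for other tools polling the same device.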

Why Retain The Data?

Data “roll up” is something that every other product I’ve used does as standard – when you look back at data from 3 months back, even if data were originally captured on a per minute basis, that detail will have been lost and you’ll be viewing aggregated (i.e. averaged, lower resolution) data instead. With the cost of storage in the past, that policy made a lot of sense, but storage is cheaper now and Statseeker estimates that you’ll need only 1GB of storage for each 1000 interfaces per year of data.
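To put that estimate in context, here is a quick back-of-the-envelope calculation. It takes the quoted figure of 1GB per 1000 interfaces per year at face value and assumes a decimal gigabyte and exactly one poll per interface per minute; the function names are mine.

```python
GB_PER_1000_INTERFACES_PER_YEAR = 1.0   # the estimate quoted by Statseeker
POLLS_PER_YEAR = 365 * 24 * 60          # one poll per minute (assumption)


def estimate_storage_gb(interfaces, years):
    """Storage needed to keep every sample for the given number of years."""
    return interfaces / 1000 * years * GB_PER_1000_INTERFACES_PER_YEAR


def implied_bytes_per_sample():
    """Bytes per interface per poll implied by the quoted estimate."""
    bytes_per_interface_year = GB_PER_1000_INTERFACES_PER_YEAR * 1e9 / 1000
    return bytes_per_interface_year / POLLS_PER_YEAR


if __name__ == "__main__":
    print(estimate_storage_gb(20000, 5))          # 20,000 ports kept for 5 years
    print(round(implied_bytes_per_sample(), 2))   # bytes per interface per minute
```

By that math, 20,000 ports retained for five years comes to about 100GB, and the estimate works out to roughly 2 bytes per interface per minute, which suggests the data is stored very compactly if the quoted figure holds.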

Lots Of Data == Slow Access?

I was surprised, because no – the demo system wasn’t even close to being slow. You access Statseeker via a web browser and can run a range of reports on all monitored elements, or you can drill down to regions, sites, devices, and so forth. Here’s the main interface page (sorry for the quality of the picture), and you can see the list of report types on the left hand side, then the drill-down filters in the next columns:

Given the number of ports being monitored (over 20,000 on the demo site), it was fast. I’m used to seeing products that are slow to generate tables of results, and in some cases extremely slow to generate charts from the collected data. Statseeker caught me off guard by generating every page – even those where it was sorting data from all ports – in 2 to 5 seconds. I should note that we viewed this demo in San Jose accessing their live demo system which is based in Australia. The claim is that due to the specially developed “time-series database”, typically any report can be displayed in just a couple of seconds, and based on what I saw this does seem to be accurate. I do not know, of course, how phenomenally powerful their demo server might be and how much investment might be required on a customer’s part to see similar performance. Fast is not necessarily a unique selling point for Statseeker of course – and given a sufficiently well-specified server, other products may be able to perform similarly – but given that there’s no roll up of data, to keep performing that way with per-minute data going back into the past is really quite impressive.

What Doesn’t Statseeker Do?

There’s quite a lot that Statseeker doesn’t do actually, and oddly that’s part of the charm. Statseeker does not claim to be the one solution for all your problems; rather it has a sweet spot – monitoring every port, regularly, and retaining the data – and it does that very well. The presenters were refreshingly candid about where the product excelled, and where it didn’t, and it’s acknowledged that for some things, you may want other products (presumably in addition to theirs, not instead of!).

Trending

Trending is not an option in Statseeker – you will not see reports telling you that a port will hit capacity in 3 months. We’re told, somewhat tongue in cheek, that the math is “too hard”. I don’t know quite how to take that response, quite honestly. Other vendors have managed this in one way or another, but I do appreciate the wild approximations that go into any trend line, and that there are a lot of assumptions and compromises required in order to offer trending. It seems a huge shame though to have so much great data and not to be able to forecast at all. The closest you get is the ability to set thresholds that you can use to highlight potential problem ports. SLA and threshold violations can be set to trigger action scripts to send emails or other alerts as needed.

Dashboards

For me, from a usability perspective, Statseeker’s big miss is that there isn’t a single place I can look to find out the health of my network. I know, it’s all a bit “pointy haired boss” to want a traffic light page, but if the person ultimately paying for the tool can see how the network is doing at a glance, presented in a way that won’t require much effort on their part, I believe it assists in justifying the purchase they made.

Arguably, what defines ‘healthy’ may be down to personal preference, but I found myself questioning how best to use the tool. If you have nothing better to do than to spend the day drilling down on the TopN reports in order to eliminate bad things from the network, you can do so quickly and efficiently. On the other hand, when trying to find out where you should start spending your time digging, we come up a bit short. There are threshold reports available, so you can see ports that have crossed thresholds that you define, but it felt like you would have to page through multiple types of reports to figure out whether you needed to fix anything, and you might be missing a huge problem that’s a wild exception to previous data because it’s on a report further down the list that you have not yet checked.

MAC/IP/Interface Report

Statseeker offers a useful report that links MAC addresses with their associated IP and the interface they connect to. This is very handy for troubleshooting, but is only updated every 60 minutes, with no historical data being retained. There are definitely compromises involved in tracking this kind of data, but the ability to look back and say that on a particular day and time, I know where a specific device was plugged in, or which MAC address had a particular IP, could be very powerful. Tie that with the ability to then see what the device did on its connected port on a per-minute data basis, and this could add another dimension to troubleshooting certain kinds of problem.

Interesting Features

Nitpicking aside, Statseeker does have a few very neat features. For example, when you look at historical data – say, the last 30 days of utilization for a port – other software I have used in the past has presented me with the same size chart but with the data for 30 days squeezed into the same x-axis. Statseeker has realized that this just doesn’t work, and they show daily charts all nicely aligned by weekday so that you can easily compare week to week, day to day:

Simple and effective; you can spot patterns very easily this way, and again, the charts appear quickly.

What Gets Monitored?

Statseeker monitors interfaces using a specific set of what they believe are the most common OIDs related to network problems. That list is generally not editable, and that’s kind of the catch throughout the product; while you can customize searches and charting, everything is so optimized in order to provide good performance and data storage, you largely have to accept the decisions made by Statseeker. Whether that’s a bad thing depends on how you view this product. As I said before, it has a relatively limited set of functionality that it appears to perform extremely well. If you’re an inveterate tweaker and customizer though, you’ll want to spend some time with the product and the sales/tech folks in order to determine whether or not you’ll be frustrated down the line.

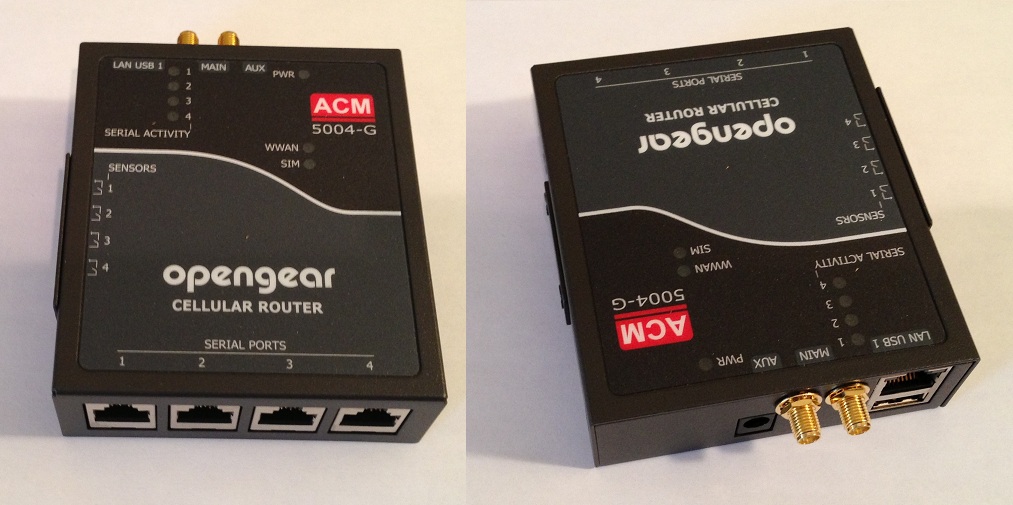

Hardware

You provide the hardware to run Statseeker – they don’t offer an appliance. We asked whether it ran well in a VM, and the answer was that while it will run, we should be aware that they use knowledge of the server’s storage controllers to optimize data read/write operations, and those didn’t work on virtualized controllers. In other words, you really want to run this on dedicated hardware. I know, how very 2000s, right?

Do I Need Statseeker?

Statseeker were pretty up front and said that if you have fewer than 1000 ports to monitor, you’re much better served by other products. 1000 ports is where their licensing begins – which would cost somewhere around $8000 – and it goes upwards at least to 500,000 ports. It doesn’t just monitor network ports; you can monitor server interfaces, and you can even monitor printer toner status, although whether it’s useful to deploy a tool to monitor toner status by the minute is highly questionable to me. But maybe your toner is more mission critical than mine.

Do you need Statseeker? I have to say that for troubleshooting port behavior, and comparing current activity to previous behavior, this idea of monitoring everything and keeping all the data is really attractive. If you can spend the time browsing for problems and digging into them, it could be a great tool to identify problems. However, it isn’t a proactive tool in my opinion – it isn’t warning you that a port has dramatically changed its behavior; it isn’t giving you a heads up that you’re rapidly heading towards a capacity problem on a link; it isn’t giving you an “at a glance” dashboard on your network status. On the other hand, I’d argue that monitoring the number of ports it can handle, as frequently as it does, while retaining all data indefinitely, gives it a unique place in your NMS arsenal. But whatever happens, you need to be clear that it probably won’t be your only tool.

Conclusions

What Statseeker does, it does well – and it may be the fastest tool I’ve seen so far, with drill downs, data display, charting, and re-sorting all operating amazingly quickly. What it doesn’t do, well, you’re going to have to fill the gap with something else. And if you have fewer than 1000 ports, frankly you can make MRTG or similar do the same thing without having to scale your NMS server up to an insane degree. Either way, I can see that Statseeker will definitely be on my list of vendors to include next time I’m involved in a network performance monitoring product selection process.

Watch The Presentation from NFD4

NFD4 presentations are streamed live, then offered for online viewing later. If you’re interested to hear more about the product, have a look at the video of the presentations at the link below, and make up your own mind:

http://techfieldday.com/appearance/statseeker-presents-at-networking-field-day-4/

Get Some Other Points of View

Here are some blog posts from other NFD4 delegates so you can get their take on Statseeker:

- Network Management System NMS – Statseeker (Brent Salisbury, @networkstatic)

- When You Get Into A Fight The Person With The Best Notes Wins (Anthony Burke, @pandom_)

- Statseeker – Does It Fit Your Organization? (Paul Stewart, @packetu)

I’ll update this list as I become aware of other posts, and these are good blogs to subscribe to even if Statseeker isn’t your primary interest!

Disclosure

Statseeker was a presenter at Networking Field Day 4, and while I received no compensation for my attendance at this event, my travel, accommodation and meals were provided. I was explicitly not required or obligated to blog, tweet, or otherwise write about or endorse the sponsors, but if I choose to do so I am free to give my honest opinions about the vendors and their products, whether positive or negative.

Please see my Disclosures page for more information.

Hi John,

A great article: very in-depth, detailed and precise.

Another feature that I really liked was the switch port count utilization. By collecting the data from every port on a specific switch, the product can tell administrators which ports haven’t seen any traffic in, say, 30 or 45 or 60 days. Those ports can then be reclaimed (un-patched in the closet). I know a number of large organizations that would purchase this solution just for that feature alone.
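The idea behind that kind of report is simple enough to sketch. The snippet below is not Statseeker’s implementation; it assumes a hypothetical dictionary that maps each port name to the last time traffic was seen on it (or `None` if traffic was never seen), and lists the ports that have been idle for at least the chosen number of days.

```python
from datetime import datetime, timedelta


def idle_ports(last_traffic_seen, now, idle_days=30):
    """Return the sorted names of ports with no traffic in the last idle_days.

    last_traffic_seen: dict of port name -> datetime of last observed
    traffic, or None if the port has never carried traffic.
    """
    cutoff = now - timedelta(days=idle_days)
    return sorted(
        port for port, seen in last_traffic_seen.items()
        if seen is None or seen < cutoff
    )


if __name__ == "__main__":
    now = datetime(2012, 11, 1)
    ports = {
        "Gi1/0/1": datetime(2012, 10, 31),  # active yesterday
        "Gi1/0/2": datetime(2012, 8, 15),   # idle for about 11 weeks
        "Gi1/0/3": None,                    # never used
    }
    print(idle_ports(ports, now, idle_days=45))
```

With per-minute data retained indefinitely, the “last seen” value can be derived for any look-back window, which is what makes the 30, 45 or 60 day choice possible.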

Cheers!

Thanks, Michael, and agreed – that was a neat feature.

I seem to recall you can do something similar with CiscoWorks RME Campus Manager (Cisco only, of course), SolarWinds User Device Tracker, and possibly even with VitalNet, but I don’t have access to any of those right now to be able to cite specifics.

Tracking unused ports over time should also be part of any organization’s capacity planning – to make sure they’re using what they have effectively, to see the rate at which ports are being used up, and thus to ensure they don’t run out unexpectedly!

Great read, John. On capacity planning, what are the thresholds you normally recommend for link capacity upgrades? I have been thinking on this lately and kind of landed at 65% for single homed links (figure that’s 90-120 of breather before risking 90%) and 50% for dual homed closer to core links for N+1 diversity. Those sound reasonable to you?

Thanks! Brent

Hi Brent. Well actually if I’m doing capacity properly (whatever that really means – highly subjective of course), I’ll also be doing forecasting based on trends. At that point, so long as you have data collected over a reasonable time period (ideally at least 6 months), actual utilization matters far less than the trend that’s developing, because that’s what will really let you know how many days out your capacity will be reached, assuming no major changes. Even that is a moving target, but a link at 65% utilization with a steep upward trend is far more critical to upgrade than one at 65% that has been rising at 1% per month for the last year.
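To make the trend point concrete, here is a minimal sketch that fits a straight line (ordinary least squares) to utilization samples and estimates how many days remain before the line crosses a threshold. A straight line is a crude model and the sample numbers are invented for illustration; real forecasting carries far more assumptions than this.

```python
def days_until_threshold(samples, threshold_pct=90.0):
    """Estimate days from the last sample until a linear trend hits a threshold.

    samples: list of (day_number, utilization_pct) tuples in time order.
    Returns None if the fitted trend is flat or falling.
    """
    n = len(samples)
    if n < 2:
        raise ValueError("need at least two samples")
    mean_x = sum(x for x, _ in samples) / n
    mean_y = sum(y for _, y in samples) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in samples)
    if sxx == 0:
        raise ValueError("samples must span more than one day")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in samples)
    slope = sxy / sxx                       # percentage points per day
    if slope <= 0:
        return None
    intercept = mean_y - slope * mean_x
    crossing_day = (threshold_pct - intercept) / slope
    return max(0.0, crossing_day - samples[-1][0])


if __name__ == "__main__":
    days = [0, 30, 60, 90, 120, 150]
    slow = list(zip(days, [60, 61, 62, 63, 64, 65]))   # +1 point per month
    steep = list(zip(days, [40, 45, 50, 55, 60, 65]))  # +5 points per month
    print(round(days_until_threshold(slow)))
    print(round(days_until_threshold(steep)))
```

Both example links sit at 65% today, but on these made-up numbers the slow one is about 750 days from 90% while the steep one is about 150 days away.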

Putting that aside, I’ve used 65% for a single path before. For dual uplinked or N+1, it honestly depends on your topology, the level of redundancy, and what you anticipate the impact being of a failure, and what your business or apps can tolerate. Funnily enough I have a half-written draft post on this exact topic, and there’s no simple answer. To take your example of 50% threshold on an N+1, it depends how big ‘N’ is, and whether there’s a likelihood that more than just the +1 link might fail (and in turn whether you have additional links ready to step up and take their place controlled via max-links or similar). If N is 1 – i.e. you have 2 links aggregated – then arguably 50% utilization on each link means that in the event of a failure, you have no headroom at all. Does that matter? Well, it depends how quickly you can restore the link, but probably it’s ok for operational purposes to run at 100% on the remaining link for a day. However, if you only trigger your ‘upgrade my link’ process at 50% aggregate utilization, then by the time you’re ready to upgrade you may be running at, say, 60% utilization. At that point a link failure means running at 120% on the remaining link, aka dropping data. In some instances that might be acceptable for a period of time, but in many cases that would be absolutely unacceptable. On that basis, for capacity planning on a 2Gbps aggregated Ethernet link, your threshold to trigger upgrade plans would be depressingly low-sounding. If you have 4 links in play, the numbers may change again, depending on your likely loss of one or more links (e.g. if 2 links go to the same chassis and linecard, is it likely that a linecard failure would occur, stripping you of half your bandwidth? How about if you spread each uplink across different linecards so you would hope to only ever lose 1 out of 4 links?).
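The arithmetic behind those numbers can be sketched as follows. This assumes traffic is spread perfectly evenly across all links in the bundle, which real load-balancing hashes do not guarantee; the function names are mine.

```python
def post_failure_utilization(per_link_pct, links, failed=1):
    """Per-link utilization on the surviving links after some links fail."""
    survivors = links - failed
    if survivors <= 0:
        raise ValueError("no surviving links")
    return per_link_pct * links / survivors


def max_safe_utilization(links, failed=1, target_pct=100.0):
    """Highest per-link utilization that keeps survivors at or below target_pct."""
    survivors = links - failed
    if survivors <= 0:
        raise ValueError("no surviving links")
    return target_pct * survivors / links


if __name__ == "__main__":
    print(post_failure_utilization(60, links=2))   # 2 links at 60%, one fails
    print(max_safe_utilization(links=2))           # N = 1 plus one spare
    print(max_safe_utilization(links=4))           # lose 1 of 4
    print(max_safe_utilization(links=4, failed=2)) # lose a linecard with 2 of 4
```

Two links at 60% become one link at 120%; the no-drop ceilings are 50% for a 2-link bundle, 75% for a 4-link bundle losing one link, and 50% again if a single linecard failure can take out two of the four. The threshold that triggers upgrade planning has to sit below those ceilings by however much growth you expect during the upgrade lead time.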

Didn’t really answer your questions, did I? 🙂

If my BOTE calc is right then even with 4 x 1G links you would want a threshold of 2.67Gbps if the goal is not to drop anything in the event of 1 link failure. That’s probably best case scenario based on this ether-channel load-balancing description:

http://www.cisco.com/c/en/us/support/docs/lan-switching/etherchannel/12023-4.html

I think Nortel/Avaya traditionally had a load balancing algorithm that would put 1/2 the traffic on 1 of the 3 links, meaning the threshold would be 2Gbps.

I don’t know about Nortel/Avaya load balancing, but LAG links on legacy Cisco IOS switches (e.g. 7600) were always best deployed in 2, 4 or 8-link groups. The load balancing algorithm very helpfully (as I recall) hashed to one of 8 values, and those values were mapped to LAG members. If you lose a link, the values are re-mapped. On a 4-link LAG that meant a mapped ratio of 2:2:2:2, but sadly, 8 doesn’t go very well into, say, 3 (if you lost 1 of those 4 links) and you would end up with the traffic being split 3:3:2 instead. It was even worse with an 8-member LAG, where you’d go from a 1:1:1:1:1:1:1:1 to a 2:1:1:1:1:1:1 ratio, thus loading one link at twice the ratio of the others.
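Here is a small sketch of that bucket arithmetic, working from my recollection of the 8-value hash rather than from any vendor documentation. It assumes traffic falls uniformly across the 8 hash values, which is a best case; the function names are hypothetical.

```python
def bucket_distribution(members, buckets=8):
    """Number of hash buckets mapped to each LAG member, busiest first."""
    base, extra = divmod(buckets, members)
    return [base + 1] * extra + [base] * (members - extra)


def aggregate_threshold_gbps(members, link_gbps=1.0, buckets=8):
    """Aggregate traffic at which the busiest member reaches line rate."""
    busiest = max(bucket_distribution(members, buckets))
    return link_gbps * buckets / busiest


if __name__ == "__main__":
    for members in (4, 3, 8, 7):
        print(members, bucket_distribution(members),
              round(aggregate_threshold_gbps(members), 2))
```

For a 4 x 1G LAG that drops to 3 members, the split becomes 3:3:2, so the busiest link carries 3/8 of the traffic and fills up at about 2.67Gbps aggregate, which matches the figure above. An 8-member LAG dropping to 7 gives 2:1:1:1:1:1:1, where the busiest link saturates at 4Gbps aggregate even though 7Gbps of capacity nominally remains.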

I understand that has changed now, and again it’s something that feeds into the decision about where is sensible to set your thresholds, how many links to put in a LAG based on the vendor and OS you’re using, and how to deploy things like min-links or backup LAG members to minimize failure impact.

Thresholds really can be one of those “how long is a piece of string” questions I fear; not least because my string is different to your string.