When Plexxi was announced as a presenter at Networking Field Day 5 (NFD5), I had no idea who they were – and I’m guessing many readers are in the same boat. Plexxi want to be your core Ethernet network, but in a rather unique way.

So here’s the concept: What if you could create a physical ring of Ethernet switches that were actually fully meshed so that any switch could talk to any other switch without any Ethernet switching along the way? And how about if you could dynamically change the bandwidth available between switches as needed? Then finally, how about trying to generate an optimal connectivity mesh based on application needs?

Yeah, that sounds like fun to me too!

The Plexxi Solution

Plexxi’s solution is an Ethernet switch (the complicatedly-named Plexxi Switch 1) that’s pretty ordinary in many respects – it’s a 1RU switch with 32 x 10GbE SFP+ ports and 2 x 40GbE QSFP+ ports. Oh, and two “LightRail™” interfaces – which are, arguably, the special sauce in this switch.

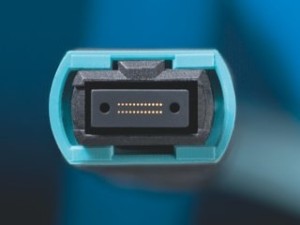

MPO Ports

The LightRail™ interface uses MPO-compatible connectors. The Multi-fiber Push-On (MPO) interface is a connector that links up multiple parallel fibers at the same time, rather than the usual two fibers in, say, an SFP:

The image above is from R&M, who have a detailed 16-page PDF describing the need for parallel optics, and specifically the MPO/MTP™ interface.

In the image above, the connector terminates 24 fibers – 12 transmit and 12 receive, which at 10Gbps each would mean 120Gbps full duplex in a single cable. Since the Plexxi Switch has two of these, it has 240Gbps of capacity across the two ports.

Correction (see comment from Mat Matthews): Plexxi uses a 12-fiber MPO with 6 Tx and 6 Rx fibers, and to get 12 channels in each direction they use Wavelength Division Multiplexing (WDM) to carry two 10Gbps lambdas on each fiber.
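The corrected fiber count still works out to 120Gbps per port. Here is that arithmetic as a few lines of Python; the constant names are my own, and the figures come from the text above and Mat's comment.

```python
# Back-of-envelope LightRail capacity using the corrected figures:
# a 12-fiber MPO per port (6 Tx fibers, 6 Rx fibers), with two
# 10Gbps wavelengths carried on each fiber via WDM.
TX_FIBERS_PER_PORT = 6
WAVELENGTHS_PER_FIBER = 2
GBPS_PER_WAVELENGTH = 10
LIGHTRAIL_PORTS = 2


def channels_per_direction(tx_fibers: int, wavelengths_per_fiber: int) -> int:
    """10Gbps channels a port transmits (and, symmetrically, receives)."""
    return tx_fibers * wavelengths_per_fiber


def port_capacity_gbps() -> int:
    """Capacity of one LightRail port in each direction (full duplex)."""
    channels = channels_per_direction(TX_FIBERS_PER_PORT, WAVELENGTHS_PER_FIBER)
    return channels * GBPS_PER_WAVELENGTH


if __name__ == "__main__":
    print(channels_per_direction(TX_FIBERS_PER_PORT, WAVELENGTHS_PER_FIBER))  # 12
    print(port_capacity_gbps())                    # 120
    print(port_capacity_gbps() * LIGHTRAIL_PORTS)  # 240
```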

Connectivity

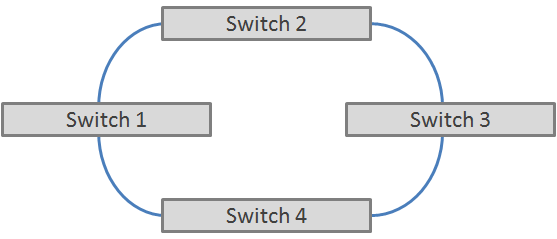

The MPO ports are the uplink / downlink on each switch that provide the ring topology for us:

So apart from the fact that the ring runs at 120Gbps, this looks like any other switch stack so far, doesn’t it? It does, and this is where it gets complicated. For a device connected to Switch 1 to talk to a device connected to Switch 3, the traffic has to pass through either Switch 2 or Switch 4. That’s suboptimal: we don’t really want the additional latency of a frame being received, a switching decision being made, and then the frame being retransmitted towards the destination. This is why leaf-spine architectures are popular – every leaf switch uplinks to every spine switch, so the number of switched hops between any two connected devices is minimized. In this case we can consider the Plexxi ring to be a replacement for the spine switches, but Plexxi don’t want you to need multiple uplinks from each leaf switch; they want the meshing to take place in the ring.
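To put numbers on the problem: in a conventional ring where every hop is an electrical switching decision, the number of switches sitting in the middle of a path grows with the ring size, whereas leaf-spine always has exactly one (the spine). A minimal sketch, assuming switches numbered 1..n and shortest-path forwarding around the ring:

```python
def ring_hops(src: int, dst: int, ring_size: int) -> int:
    """Fewest links between two switches numbered 1..ring_size in a ring."""
    clockwise = (dst - src) % ring_size
    return min(clockwise, ring_size - clockwise)


def intermediate_switches(src: int, dst: int, ring_size: int) -> int:
    """Switches that must receive, switch and retransmit a frame on that path."""
    return max(ring_hops(src, dst, ring_size) - 1, 0)


if __name__ == "__main__":
    # 4-switch ring: Switch 1 -> Switch 3 is two links away, so Switch 2 or 4
    # has to make a switching decision in the middle.
    print(ring_hops(1, 3, 4))                # 2
    print(intermediate_switches(1, 3, 4))    # 1
    # 11-switch ring: the far side of the ring is four switches away.
    print(ring_hops(1, 6, 11))               # 5
    print(intermediate_switches(1, 6, 11))   # 4
```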

Full Mesh Topology

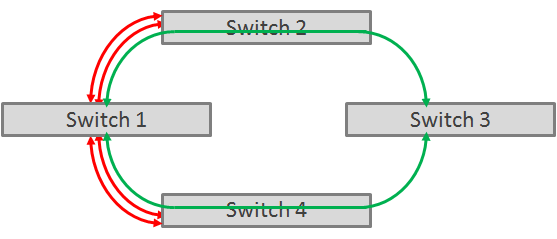

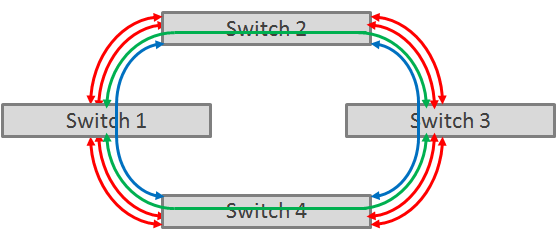

Now the LightRail™ comes into its own. The 120Gbps uplink and downlink ports are not actually configured as point-to-point links with the adjacent switches. Instead, each switch is capable of either terminating a wavelength or optically switching it (quite likely with a mirror), so that from the light’s perspective, a switch that optically switches the wavelength effectively doesn’t exist in the path. For example, imagine we have 4 wavelengths on each uplink/downlink port instead of 12 (just to simplify things). Switch 1 will have two 10Gbps wavelengths terminating on each neighboring switch, plus one 10Gbps wavelength in each direction that the neighbor optically switches through to Switch 3. Here’s the view from Switch 1’s perspective – it has 20Gbps to each directly attached neighbor, and 20Gbps (10Gbps in each direction) to Switch 3:

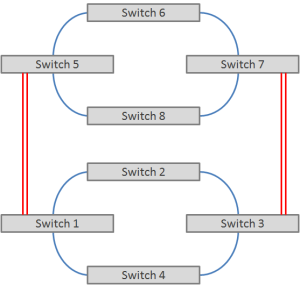

I said that there were 4 wavelengths in the example, and I’ve only accounted for three of them. The fourth will be optically switching a 10Gbps wavelength between Switches 2 and 4. So adding in the connectivity for the other switches as well, it looks like this:
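The 4-wavelength example can be checked mechanically. This sketch models each lightpath as the list of switches it crosses (my own representation, not anything Plexxi described), then confirms that every pair of switches gets 20Gbps and that no ring segment carries more than the 4 wavelengths assumed in the example.

```python
from collections import Counter

GBPS_PER_WAVELENGTH = 10

# The simplified 4-switch example. Each lightpath lists the switches it crosses;
# only the first and last switch terminate it, any switch in between passes it
# through optically.
LIGHTPATHS = [
    [1, 2], [1, 2], [2, 3], [2, 3],   # two wavelengths per neighbor pair
    [3, 4], [3, 4], [4, 1], [4, 1],
    [1, 2, 3], [1, 4, 3],             # Switch 1 <-> Switch 3, one each way round
    [2, 3, 4], [2, 1, 4],             # Switch 2 <-> Switch 4, one each way round
]


def pair_bandwidth(lightpaths):
    """Gbps of direct (single switching hop) capacity between each pair of switches."""
    bw = Counter()
    for path in lightpaths:
        bw[frozenset((path[0], path[-1]))] += GBPS_PER_WAVELENGTH
    return bw


def wavelengths_per_link(lightpaths):
    """How many wavelengths each physical ring segment carries."""
    load = Counter()
    for path in lightpaths:
        for a, b in zip(path, path[1:]):
            load[frozenset((a, b))] += 1
    return load


def passthrough_count(lightpaths, switch):
    """Lightpaths that a switch optically switches through without terminating."""
    return sum(1 for path in lightpaths if switch in path[1:-1])


if __name__ == "__main__":
    print(sorted(pair_bandwidth(LIGHTPATHS).values()))        # [20, 20, 20, 20, 20, 20]
    print(sorted(wavelengths_per_link(LIGHTPATHS).values()))  # [4, 4, 4, 4]
    print(passthrough_count(LIGHTPATHS, 1))                   # 1 (the 2 <-> 4 path)
```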

Note that every switch in the ring has 20Gbps directly to every other switch – a single hop. In the real switch, LightRail™ has 12 wavelengths in each direction. I’ll spare you (and me) the pain of seeing that diagram, but the basis for connectivity is that there is 40Gbps to each neighboring switch instead of 20 in my example, and then there would be 20Gbps in each direction to other switches in the ring. Doing some swift math, that means that you can have a 20Gbps full mesh for up to 11 switches in a ring – i.e. each switch has at least a 20Gbps direct connection to 5 switches either side of it.
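One way to reproduce the 11-switch figure is to count the wavelengths terminated at a single port: 40Gbps (4 wavelengths) to the adjacent switch leaves 8, which is enough for 20Gbps (2 wavelengths) to each of four more switches. That split is my reading of the numbers above rather than a published Plexxi allocation, and it deliberately ignores wavelengths consumed by other switches’ pass-through traffic, so treat it as back-of-envelope only.

```python
WAVELENGTHS_PER_PORT = 12  # per LightRail port, per direction


def reach_per_side(neighbor_wavelengths: int = 4, peer_wavelengths: int = 2,
                   per_port: int = WAVELENGTHS_PER_PORT) -> int:
    """Switches on one side that get a direct lightpath from this port.

    Assumption: the adjacent switch gets `neighbor_wavelengths`, every more
    distant switch gets `peer_wavelengths`, and pass-through wavelengths are
    ignored entirely.
    """
    remaining = per_port - neighbor_wavelengths
    return 1 + remaining // peer_wavelengths


def max_full_mesh_ring(**kwargs) -> int:
    """This switch plus `reach_per_side` switches in each direction."""
    return 2 * reach_per_side(**kwargs) + 1


if __name__ == "__main__":
    print(reach_per_side())      # 5
    print(max_full_mesh_ring())  # 11
```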

Bandwidth Management

Each switch has 32 x 10GbE ports, so it’s conceivable that you may need more than 20Gbps of throughput to another switch. Helpfully, while the direct 20Gbps is the preferable path (because it’s lower latency), it is also possible to push traffic over another link and let it be switched on the way there. In my example with 4 switches, traffic from Switch 1 to Switch 3 could be sent via Switch 2 or Switch 4, as well as over the direct links from Switch 1 to Switch 3.
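A toy version of that preference order, under my own assumptions: fill the direct 20Gbps first, then spill the remainder onto two-hop paths through other switches, largest spare capacity first. The function and its parameters are hypothetical; this is not how Plexxi actually places traffic.

```python
def split_demand(demand_gbps, direct_gbps, indirect_spare_gbps):
    """Greedily place traffic for one switch pair.

    Direct lightpath first (lowest latency), then two-hop paths through other
    switches. indirect_spare_gbps maps the intermediate switch number to the
    spare Gbps on that path. Returns (allocation, unplaced_gbps).
    """
    allocation = {}
    on_direct = min(demand_gbps, direct_gbps)
    if on_direct:
        allocation["direct"] = on_direct
    remaining = demand_gbps - on_direct
    # reverse=True keeps ties in their original order (sorted() is stable).
    for via, spare in sorted(indirect_spare_gbps.items(),
                             key=lambda kv: kv[1], reverse=True):
        if remaining <= 0:
            break
        take = min(remaining, spare)
        if take > 0:
            allocation[f"via switch {via}"] = take
            remaining -= take
    return allocation, remaining


if __name__ == "__main__":
    # Switch 1 -> Switch 3 needs 35Gbps; 20Gbps direct, 10Gbps spare via 2 and via 4.
    print(split_demand(35, 20, {2: 10, 4: 10}))
    # ({'direct': 20, 'via switch 2': 10, 'via switch 4': 5}, 0)
```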

Confused yet? We’re not done. The ring can grow beyond 11 switches – but you will be forced to send traffic through other switches if you need to talk to switches further away than 5 either side of you. This might sound like a scalability problem (and in some ways it is), but Plexxi also mentioned that they are looking at ways to scale the rings vertically, e.g.:

I should emphasize that this is road map speculation, so who knows if it will actually end up like that. It wasn’t clear to me if this connectivity would somehow use LightRail™ or would utilize the 40GbE ports as an interconnect, or – potentially – if there might be a Plexxi Switch 2 that might have more LightRail™ ports for vertical fun. I guess we’ll see.

But Wait, There’s More

Plexxi aren’t content with creating an 11-switch, 20Gbps fully meshed topology; after all, where would we be without the buzzword that’s almost out-buzzing “cloud” – SDN (Software Defined Networking)? Yeah, they got that too, and in fact they push it as one of the primary selling points. Out of the box, the Plexxi switches build a default topology, but once the controller comes online, the network can be changed based on configured policy.

To be clear, the Plexxi controller (product name: Plexxi Control) is not a real-time in-flow controller. Instead, periodically (at a configurable interval) the controller calculates an optimal topology based on the policies that are configured and it configures the switches as required.

What’s to configure? Well, if you recall from earlier in this post, I said that each fiber on the MPO could either be terminated locally, or optically switched through. That’s not a fixed configuration – it’s dynamic, and any fiber anywhere in the ring can have its mode switched from termination to passthrough.
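Since the controller’s job each interval is to work out a target topology and push it to the switches, one way to picture its output is a per-switch map from wavelength channel to mode. This hypothetical sketch (the `Mode` names and the `(switch, channel)` keys are mine, not Plexxi Control’s) computes only the channels whose mode actually has to change:

```python
from enum import Enum


class Mode(Enum):
    TERMINATE = "terminate"      # wavelength handed to this switch's electrical side
    PASSTHROUGH = "passthrough"  # wavelength optically switched on to the next switch


def config_diff(current, desired):
    """Return {(switch, channel): new_mode} for every channel that must change.

    Both arguments map (switch, channel) -> Mode. Channels missing from
    `current` are treated as needing configuration.
    """
    return {
        key: mode
        for key, mode in desired.items()
        if current.get(key) is not mode
    }


if __name__ == "__main__":
    current = {(1, 0): Mode.TERMINATE, (1, 1): Mode.TERMINATE, (2, 0): Mode.PASSTHROUGH}
    desired = {(1, 0): Mode.TERMINATE, (1, 1): Mode.PASSTHROUGH, (2, 0): Mode.PASSTHROUGH}
    print(list(config_diff(current, desired)))  # [(1, 1)]
```

Pushing only the diff keeps each periodic recalculation from touching channels that are already correct.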

The controller monitors link throughput (basic port counters) and can react automatically to provision more bandwidth between switches if it’s required, and reduce bandwidth elsewhere if there’s a lighter load.

The more complex policy control comes with what Plexxi call Affinities – and in fact they call this solution Affinity Networking. The idea seems to be this: if you know, for example, that the finance servers on Switch 1 need to talk to the Oracle servers on Switch 2, and low latency is critically important to those flows, you can use the Control software to create a policy asking for that traffic to travel on its own wavelength. You can make this isolation permanent, or ask for it to be kept isolated when possible. Plexxi Control then sends out the configuration that tells Switch 1 and Switch 2 to treat that application traffic differently and, if necessary, changes the default path for non-affinity traffic to keep it out of the way.

Isolation must be treated with care, because you are dedicating an entire 10Gbps wavelength to that traffic. The ‘isolate when possible’ mode tries to keep the affinity traffic on its own dedicated link unless bandwidth gets tight, in which case it may have to put other traffic on that same link. Still, that’s something you can’t do in a regular network.
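The difference between the two isolation modes can be expressed as a small decision rule. This is a hypothetical model (the class, field and function names are mine, and Plexxi did not describe their actual logic): a permanent affinity never shares its wavelength, while an "isolate when possible" affinity shares only when the other paths can no longer carry the remaining traffic.

```python
from dataclasses import dataclass
from enum import Enum


class Isolation(Enum):
    PERMANENT = "permanent"          # never share the dedicated wavelength
    WHEN_POSSIBLE = "when_possible"  # share only when bandwidth gets tight


@dataclass
class Affinity:
    name: str
    src_switch: int
    dst_switch: int
    isolation: Isolation


def may_share_wavelength(affinity: Affinity, other_demand_gbps: float,
                         other_capacity_gbps: float) -> bool:
    """Decide whether non-affinity traffic may use the affinity's wavelength."""
    if affinity.isolation is Isolation.PERMANENT:
        return False
    # Isolate when possible: share only if the remaining paths cannot
    # absorb the other traffic on their own.
    return other_demand_gbps > other_capacity_gbps


if __name__ == "__main__":
    finance = Affinity("finance-to-oracle", 1, 2, Isolation.WHEN_POSSIBLE)
    print(may_share_wavelength(finance, 15, 10))  # True: bandwidth is tight
    print(may_share_wavelength(finance, 5, 10))   # False: stays isolated
```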

Oh – and there’s no Spanning Tree in the mesh; this is multi-pathing layer 2.
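Plexxi didn’t go into how frames are spread across the multiple paths. A common technique in multipath fabrics generally (not confirmed for Plexxi) is to hash each flow’s header fields, so that a given flow always takes the same path and its frames stay in order, while different flows spread across the available paths. A sketch, with the choice of fields being my own assumption:

```python
import zlib


def pick_path(src_mac: str, dst_mac: str, vlan: int, paths: list) -> str:
    """Choose one of several paths for a layer 2 flow.

    Hashing the flow's identifying fields means every frame of the same flow
    takes the same path, while different flows spread across the paths.
    zlib.crc32 is used because it is deterministic across runs.
    """
    key = f"{src_mac}|{dst_mac}|{vlan}".encode()
    return paths[zlib.crc32(key) % len(paths)]


if __name__ == "__main__":
    paths = ["direct", "via switch 2", "via switch 4"]
    first = pick_path("00:11:22:33:44:55", "66:77:88:99:aa:bb", 10, paths)
    again = pick_path("00:11:22:33:44:55", "66:77:88:99:aa:bb", 10, paths)
    print(first == again)  # True: same flow, same path
```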

I am sure I’m selling the Controller short here – so please do watch the video below to hear direct from Plexxi all the things it can do.

Maintenance And Failures

One more thing that’s important to understand is that if a switch fails, the optics fail closed – in other words, the pass-through traffic continues without interruption.

If you want to add a switch, remove a switch or otherwise interrupt the links between them, the controller can be asked to migrate traffic off the affected switch / links (obviously you want to do this during a low-traffic period, since you’ll be reducing bandwidth in the mesh). The idea is that you can make those changes without the traffic interruption you would see in a classic network: by proactively migrating traffic away, there’s no active traffic to impact.
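Failing closed and planned removal affect different sets of lightpaths. Reusing the simplified 4-switch example, this sketch assumes that a failed switch only takes down the lightpaths it terminates (its pass-through optics keep working), while physically removing a switch also breaks the lightpaths it passes through, so those need draining too. Both the lightpath representation and that distinction are my own modeling assumptions.

```python
# Lightpaths from the simplified 4-switch example, as lists of the switches
# each one crosses; only the end switches terminate a lightpath.
LIGHTPATHS = [
    [1, 2], [1, 2], [2, 3], [2, 3], [3, 4], [3, 4], [4, 1], [4, 1],
    [1, 2, 3], [1, 4, 3], [2, 3, 4], [2, 1, 4],
]


def lost_on_failure(lightpaths, switch):
    """Optics fail closed: only lightpaths terminated by the switch go down."""
    return [p for p in lightpaths if switch in (p[0], p[-1])]


def to_drain_before_removal(lightpaths, switch):
    """Removing the switch breaks the fiber, so pass-through paths move too."""
    return [p for p in lightpaths if switch in p]


if __name__ == "__main__":
    print(len(lost_on_failure(LIGHTPATHS, 1)))          # 6
    print(len(to_drain_before_removal(LIGHTPATHS, 1)))  # 7
```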

The SDN Gap

This isn’t just a Plexxi issue, but there’s a gap here. Defining affinities is a manual process; there’s not (yet) an ability to identify heavy flows in the network and offer them up to you as potential affinities. After all, if you ask a server admin what remote nodes their server talks to, you’ll often get no response. The same applies if you ask the application owners – each team knows what they need to know, but may not truly understand the flows involved, and that’s going to make the process of creating affinities a challenge in some environments.
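The missing piece is essentially a traffic-analysis step. As a hypothetical sketch (the record format and threshold are assumptions, and this is not a Plexxi feature), aggregating flow records per host pair and surfacing the heaviest talkers as candidate affinities could look like this:

```python
from collections import Counter


def candidate_affinities(flow_records, min_bytes):
    """Aggregate (src, dst, bytes) flow records per host pair.

    Traffic in both directions counts towards the same pair. Returns the
    pairs whose total volume meets `min_bytes`, heaviest first.
    """
    totals = Counter()
    for src, dst, nbytes in flow_records:
        totals[tuple(sorted((src, dst)))] += nbytes
    return [(pair, total) for pair, total in totals.most_common() if total >= min_bytes]


if __name__ == "__main__":
    records = [
        ("finance-01", "oracle-01", 8_000_000),
        ("oracle-01", "finance-01", 6_000_000),
        ("web-01", "cache-01", 500_000),
    ]
    print(candidate_affinities(records, 1_000_000))
    # [(('finance-01', 'oracle-01'), 14000000)]
```

A human would still need to review the list, but it turns "ask the server admin" into "confirm what the network already sees".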

Conclusions

I like this product! If you’re creating a meshed network, then just in terms of the reduced cabling, this looks like a win. Plexxi are a fairly new company, which may cause concern for some. Everybody’s new at some point though, and if Plexxi can continue to get reference sites using their hardware, it will help their case with nervous buyers. I’m less certain about the benefits of the Controller beyond basic bandwidth management, because I’m not sure how maintaining the affinities will work in production.

As with many of the NFD reviews, I have not had hands-on time with this product, so I cannot speak to how well it will work in a real network. However, I’ll be watching them with interest. If nothing else, I’ll watch their online shop in case they have a sale on “I’m Plexxi And I Know It” T-shirts.

Check Out Plexxi’s Presentations at NFD5

Make up your own mind and watch Plexxi tell you themselves.

Introduction

The Architecture Explained

The Controller Explained

Disclosures

Plexxi was a paid presenter at Networking Field Day 5, and while I received no compensation for my attendance at this event, my travel, accommodation and meals were paid for by NFD5. I was explicitly not required or obligated to blog, tweet, or otherwise write about or endorse the sponsors, but if I choose to do so I am free to give my honest opinions about the vendors and their products, whether positive or negative.

Please see my Disclosures page for more information.

Hi John,

Excellent review, thanks very much! And great to meet you last week!

Couple of minor clarifications. On our fiber, we actually use a 12-fiber cable (6 pairs) not 24. Since we use WDM-based optics, we can carry multiple channels over each fiber, and therefore achieve the full 24 channels with just 12 fibers. This is just a minor correction, the same would work with 24 fibers, we just didn’t need that many.

On the Affinity configuration – in a sense you are correct that it is a manual process. But this is where the power of SDN really shines through. For example, our integration with Boundary (a really cool next-gen APM tool) shows how we can automagically use data generated by telemetry tools to capture affinity information that can then be used to adjust topologies. This could be completely automated since everything Control does is managed via REST-based APIs. We’ll be demo’ing this integration on SDNCentral Fri 3/15 (link here for anyone that is interested in seeing this: http://www.sdncentral.com/companies/sdn-demofriday-with-plexxi-and-boundary/2013/02/).

On the ring scalability… the Switch 1 ring can grow to arbitrary sizes. Yes it is true that if affinities span more than the 5 east/west reach, we have to bridge together optical channels, but that is done in the optical domain as well. Also, many customers do create smaller rings and connect them using the electrical 40 GbE ports (or 10 GbE ports) and we’ll intelligently load balance over all inter-ring connections.

But for true hyperscale designs, our roadmap does also involve a way to connect rings optically, which will allow a huge variety of very scalable designs (rings of rings, etc). Google (verb not noun) torus (or see this paper: http://users.ecel.ufl.edu/~wangd/JOCN2011.pdf for some hints at what we have planned.) The vision is a huge pool of capacity that you can use the way you want to, or have it be driven automatically by application affinities using smart SDN-based control software.

Thanks for the corrections and additional information, Mat. I’ve added a correction to the MPO description.

The Boundary integration sounds like exactly the kind of thing that will be needed to make intelligent decisions about provisioning when there are hundreds or thousands of affinities that may need to be set up in a busy DC environment.

I’ll read up on Torus – if we can see this scaling in 3D as it were, it could be incredibly powerful.

Hi John – great article – thanks. A minor point:

“So apart from the fact that the ring runs at 120Gbps”.

Each uplink runs at 120Gbps FD, and each switch has 2 of these.

The speed of the ring itself actually depends on how many devices are attached to it. An 11 switch ring has a bisection bandwidth of 1.28Tbps.