Cisco Virtual Port Channels (vPC)

I was asked to explain Virtual Port Channels recently, and figured that I may as well share some thoughts about this useful technology as well as some potential pitfalls.

vPC solves two problems that most people are likely to have in their network: STP recalculations and unused capacity in redundant layer 2 uplinks.

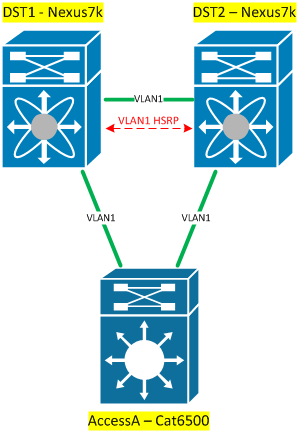

Let’s look at a classic scenario where this can happen:

The network shown in this diagram clearly has resiliency of sorts for the access layer by way of dual uplinks, but that redundancy has also introduced a Layer 2 loop. Spanning Tree Protocol (STP) will deal with the loop for us and ensure that we have a single active path, but that has a couple of down sides:

- One of our uplinks will be blocked (unused bandwidth); and

- When a topology change occurs, we trigger a Topology Change Notification (TCN) that will cause STP to recalculate (which means an interruption to the traffic) and CAM (layer 2 forwarding) tables to be prematurely flushed, leading to short term flooding when frames start flowing again.

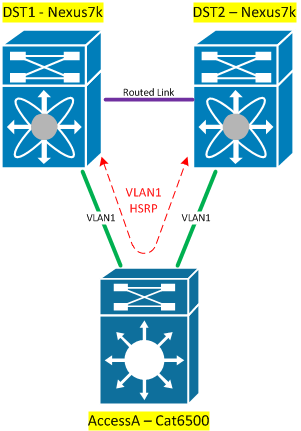

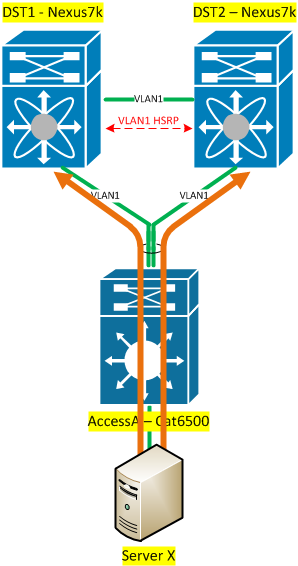

So how can we avoid that loop so that we never see an STP recalculation? One obvious solution is to not create the loop in the first place. This can be achieved if we avoid bridging any of the uplinked vlans between the two distribution switches:

HSRP/VRRP traffic is reliant on the access switch itself to let the two DSTs see each other, but this actually isn’t a real problem. This design works well up to a point, but as you add additional access switches that carry one or more of the same VLANs as another access switch, it gets messier:

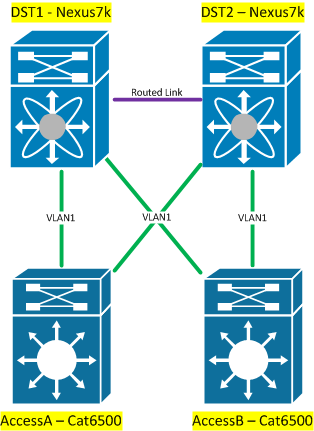

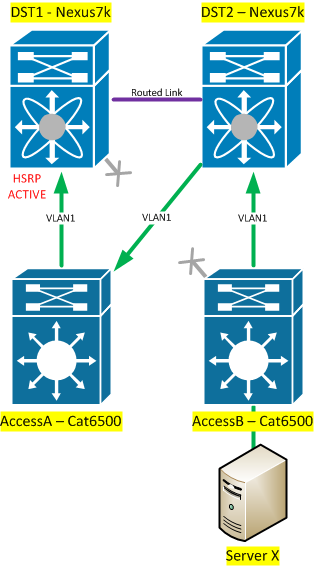

In this scenario you not only reintroduce the layer 2 loop you were trying to get rid of in the first place, but now the access layer is likely to become a transit path if there’s a link failure:

Traffic destined for the active HSRP gateway from ServerX will flow from AccessB -> DST2 -> AccessA -> DST1. This is really unacceptable: you do not want your access layer to be in the layer 2 forwarding path. That said, if each of your access switches has dedicated vlan(s) that won’t show up on other switches, then have at it!

vPC

vPC proposes an alternative solution: make the redundant uplinks look like a single link so that technically (from a layer 2 perspective) it no longer looks like there is a loop. vPC achieves this by treating the two uplinks as a special kind of etherchannel, with a single device on one end (e.g. the access switch), and two devices on the other (e.g. the distribution switches):

Logically speaking – since the distribution switches are providing a virtual port-channel, this is the equivalent of having a point to point link:

Clearly, the distribution switches will need to behave in a rather unusual way to allow this to work, but interestingly the access switch doesn’t need any special capabilities; from its perspective, this is just a regular old port-channel. This is a great selling point in terms of migrating a non-vPC network to a vPC architecture – end devices don’t need to undergo a forklift upgrade; only the distribution layer in this example would need to be upgraded to add vPC. This also means that vPC could be used with servers as well as network devices; so long as they can use LACP, they’re good to go. In the case of servers, of course, they would have been edge ports anyway and should not be bridging frames and causing a loop – but the point is, it’s possible to do. Maybe more usefully, it also opens up the possibility of having non-Cisco LACP-capable devices being able to use vPC uplinks.
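As a rough illustration of that point, the downstream side could be as plain as the sketch below. It assumes an NX-OS access switch with four uplinks (Ethernet 1/1-2 to DST1, Ethernet 1/3-4 to DST2) and an arbitrary port-channel number of 10; none of those details come from the diagrams, and an IOS switch would use very similar commands. Note that nothing in it mentions vPC.

```
! Access switch: an ordinary LACP port-channel, no vPC commands needed
feature lacp
!
! Hypothetical uplinks: Ethernet 1/1-2 go to DST1, Ethernet 1/3-4 go to DST2
interface Ethernet1/1-4
  switchport
  switchport mode trunk
  switchport trunk allowed vlan a,b,c
  channel-group 10 mode active
  no shutdown
!
interface port-channel10
  switchport
  switchport mode trunk
  switchport trunk allowed vlan a,b,c
  no shutdown
```

From the access switch's point of view all four ports lead to a single LACP partner, which is exactly the illusion the two DSTs are working to maintain.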

So how does vPC help? Well, it has a number of benefits. First, logically speaking there’s no layer 2 loop any more so the architecture is immediately improved. Secondly, because there’s no loop, STP doesn’t have to block either of the uplinks, meaning you can utilize the full bandwidth of your port-channel:

In reality, this benefit giveth with one hand and taketh away with the other: if a DST should fail (and you thus lose half your uplinks), the remaining links still have to be able to handle the full load. So in theory, in a lossless network, you have to ensure that your port-channel never exceeds 50% utilization, or you’ll be oversubscribed after a failover. In some networks, oversubscription (to some degree) during failure can be a compromise that the business will accept in order to maximize throughput the rest of the time. Personally, I lean away from that model, but then, oversubscription in the WAN makes me twitchy as well so maybe I’m overly cautious!

Thirdly, if one of the uplinks fails there is no logical topology change (just a reduction in bandwidth for the link), and consequently STP doesn’t have to recalculate.

The final benefit, the one that helps your capacity planners sit a little more comfortably, is that using a vPC means that your uplink traffic is split 50/50 across both distribution switches, subject to the traffic patterns traversing the link and the etherchannel load-balancing algorithm chosen. There’s a gotcha here, which we’ll look at in a moment. However, the idea of running uplinks at 50% utilization and sharing traffic equally across both distribution switches is one that should make capacity planners delighted on one hand (everything is ticking over with middling utilization) and hating you on the other (failover triggers now become more complex). If your hardware upgrade budget funding is based on presenting scary capacity triggers to upper management, you’d best avoid vPC and run your one non-blocked uplink at 100% so you can justify some upgrades (nobody ever mentions the other uplink running at 0% utilization). Who would pay for an upgrade on a network that runs at 50% capacity? So some education may be required so that the people with the money understand what these new evenly balanced utilization figures really mean to the network. I’m a big fan though of spreading the load where appropriate, so to me this is a big win.

You may be wondering how well HSRP/VRRP configured on the distribution switches works when using vPC. If traffic is shared over the uplinks, it would make sense that half the traffic would end up being passed over the link between DST2 and DST1 in order to reach an active HSRP MAC address on DST1, thus saturating the inter-chassis link as well as spoiling the benefits of the load sharing and adding another layer 2 hop in the path of half your frames. Thankfully vPC is smarter than that, and while you nominally have an active and standby gateway configured, in actuality both distribution switches will accept traffic destined for the HSRP MAC address and will route it based on their local routing tables. There’s a subtle implication to consider here: the DSTs are independent devices with their own routing processes, so it is critical to ensure that both have pretty much identical routing tables (or at least, equivalent connectivity). If they don’t, we have a situation that’s a little challenging to troubleshoot, where half of our flows seem to fail (the half going to the router with the bad routing table).
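For reference, here is a minimal sketch of the gateway side on DST1. It assumes VLAN 10 is one of the vPC VLANs, that the interface-vlan and hsrp features are already enabled, and it uses documentation addresses from 192.0.2.0/24; the VLAN, group number, and addresses are all made up for illustration. DST2 would carry the same group with its own interface address (say 192.0.2.3) and the default priority.

```
! DST1: SVI acting as the nominal active HSRP gateway for a vPC VLAN
interface Vlan10
  no shutdown
  ip address 192.0.2.2/24
  hsrp 10
    ! Virtual gateway address that hosts use as their default gateway
    ip 192.0.2.1
    ! Higher priority makes DST1 the nominal active gateway
    priority 110
    preempt
```

Even though DST2 is nominally standby here, under vPC it still routes frames addressed to the HSRP MAC itself rather than handing them across the peer-link.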

I mentioned a gotcha with the load sharing, and it’s this: the load sharing works perfectly well in a Northbound direction, i.e. from the access switch to the distribution switch. However, return traffic is not automagically load-balanced from the distribution layer back to the access layer. Traffic destined for a vPC-connected access switch will be forwarded directly from the distribution switch that received it. When you think about it, this makes sense: if vPC attempted to load-balance traffic Southbound, each DST would have to make a load-balancing decision, figure out that a particular frame should be going via the other distribution switch, and would then have to send it across the inter-chassis link to the other switch, in the hope that the other switch agrees on the load-balancing decision. And again, since the switches are independently configured, a misconfiguration where different etherchannel load-balancing algorithms were configured on each switch could be catastrophic. What this ultimately means is that ideally the network should be architected to ensure that traffic destined for the access layer is received on both DST1 and DST2 equally. Two things to consider:

- If you have non-vPC HSRP/VRRP-enabled vlans on the same distribution switches as your vPC vlans and there will be traffic flowing between non-vPC and vPC vlans, you may want to evaluate whether traffic levels justify considering GLBP or balancing alternate non-VPC vlan active gateways between the DSTs.

- Links from Distribution to Core may need to be full mesh (i.e. dual uplinks from each DST) rather than a box topology, to ensure that regardless of which core router traffic hits, it can be load balanced to both distribution switches.

Requirements

In order to make vPC function, you need a layer 2 etherchannel between (in this example) the two DST switches. This vPC “peer-link” is dedicated to carrying the vlans that are being carried over the vPC uplinks. Typically you’ll want to ensure that 10gig ports used for this purpose are in dedicated mode, to avoid oversubscription issues and potential packet loss.

Additionally a separate link is required between the DSTs purely for control purposes – the “keepalive” link. This is a layer 3 link between the chassis; it’s not expected to carry a significant volume of traffic, but it’s critical. It doesn’t have to be an etherchannel, but it may make sense to do so for resiliency purposes in case a line card fails – but arguably (depending on your port utilizations) it is ok to use a port in shared mode for this purpose. The keepalive link is configured to sit in its own vrf, so you can basically re-use the same keepalive peer IPs on every pair of devices, as they’ll never be seen (helps with template configs, right?).

And finally, given that we likely want a routed link between the two DSTs (so that they can route traffic directly between them as required), you’ll probably need yet another port-channel (l2 or l3) over which to route. Cisco advise that you should only trunk vlans that are subject to vPC over the vpc peer-link etherchannel, so you can’t really add your inter-chassis routed link to that.
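One way to provide that routed link is a small layer 3 port-channel of its own. The sketch below assumes two spare ports (Ethernet 2/2 and 3/2), port-channel number 3, and a 10.0.1.0/30 transit subnet, none of which come from the original design; DST2 would mirror it using 10.0.1.2.

```
! DST1: dedicated routed inter-chassis link, kept separate from the vPC peer-link
interface Ethernet 2/2
  no switchport
  channel-group 3 mode active
  no shutdown
interface Ethernet 3/2
  no switchport
  channel-group 3 mode active
  no shutdown
!
interface port-channel3
  no switchport
  ip address 10.0.1.1/30
  no shutdown
```

The layer 2 alternative is a separate trunk carrying a non-vPC VLAN, with an SVI on each DST to route over.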

Support for vPC is available on the Cisco Nexus 5000/7000 series switches, so if you don’t already have those (at least for the distribution layer) you’re talking forklift. The rumors (and the leaked picture and investigations rather nicely noted here by FryGuy) suggest that the 7000 series is gaining a 7006 and a 7009 chassis, I would assume sized to fit in the exact same space as the 6506/09 or 7606/09 that they are hoping you will upgrade. This also means moving to NXOS, which is probably another post all in itself. And since it’s NXOS, and pretty much everything is turned off by default, don’t forget to enable the vpc feature if you want it to work. And lacp. And interface-vlan. And hsrp. You get the idea…
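For the features named above, that boils down to something like the following on each DST (a sketch; enable only what your design actually uses):

```
feature vpc
feature lacp
feature interface-vlan
feature hsrp
```

Running `show feature` afterwards lists which features are currently enabled.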

Configuration

I don’t want to get buried in the myriad configuration options, but here are a few key items to consider (which I’ll format more nicely when I get the hang of this thing better). This would be the DST1 side of things – I will take it as read that you can reverse commands to make the DST2 config:

    ! Enable features
    feature vpc
    feature lacp
    !
    ! Define a vrf for the keepalive traffic
    vrf context vpc-ka
    !
    ! Configure vpc domain - this switch (in the vpc-ka vrf) is 10.0.0.1; its partner is 10.0.0.2
    vpc domain 1
      role priority 100
      system-priority 100
      peer-keepalive destination 10.0.0.2 source 10.0.0.1 vrf vpc-ka
      peer-gateway
    !
    ! Configure the VPC keepalive interface (port-channel or single interface)
    ! A /30 is used so that 10.0.0.1 and 10.0.0.2 share a subnet (they do not share a /31)
    interface Ethernet 1/1
      vrf member vpc-ka
      ip address 10.0.0.1/30
      no shutdown
    !
    ! Configure the layer 2 interchassis peer-link
    interface Ethernet 2/1
      channel-group 1 force mode active
      no shutdown
    interface Ethernet 3/1
      channel-group 1 force mode active
      no shutdown
    interface port-channel1
      switchport
      switchport mode trunk
      switchport trunk allowed vlan a,b,c
      vpc peer-link
      spanning-tree port type normal
      no shut
    !
    ! Configure the actual vPC facing the downstream device
    ! For the 'vpc xxx' command:
    ! 1) use a different xxx for each port-channel to a downstream device;
    ! 2) ensure that the 'partner' port-channel on DST2 uses the same xxx;
    ! 3) xxx has nothing to do with the vpc domain number configured earlier.
    !
    interface Ethernet 2/8
      channel-group 2 force mode active
      no shut
    interface Ethernet 3/8
      channel-group 2 force mode active
      no shut
    interface port-channel2
      switchport
      switchport mode trunk
      switchport trunk allowed vlan a,b,c
      vpc 102
      no shut
    !
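For completeness, here is a sketch of the matching DST2 side, assuming the same port numbering on both chassis. Only the keepalive addressing differs; the peer-link and member interfaces are configured as on DST1, and the downstream port-channel must carry the same 'vpc 102' number. One assumption to flag: the keepalive interface is shown with a /30 mask, because 10.0.0.1 and 10.0.0.2 do not fall inside the same /31.

```
! DST2: keepalive source and destination are reversed relative to DST1
vrf context vpc-ka
!
vpc domain 1
  role priority 100
  system-priority 100
  peer-keepalive destination 10.0.0.1 source 10.0.0.2 vrf vpc-ka
  peer-gateway
!
interface Ethernet 1/1
  vrf member vpc-ka
  ip address 10.0.0.2/30
  no shutdown
!
! The downstream vPC must use the same vpc number as on DST1
interface port-channel2
  switchport
  switchport mode trunk
  switchport trunk allowed vlan a,b,c
  vpc 102
  no shut
```

Once both sides are configured, these show commands are the usual first checks for the keepalive, the peer-link, and any configuration mismatches between the peers:

```
show vpc
show vpc peer-keepalive
show vpc consistency-parameters global
show port-channel summary
```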

Conclusions

- vPC is a great solution to some common problems (anything to help me minimize the impact of STP gets my vote!)

- Decide whether you actually need vPC or if an alternative architecture is better

- If you deploy, consider your traffic patterns carefully, both from the perspective of etherchannel loadbalancing algorithms as well as return traffic.

- Work with your capacity management and budget holders to help them understand what the resulting utilization figures really mean.

Hopefully not too many errors in this; feel free to correct and discuss!

Hey John,

If you’re still checking this blog entry, I have some questions:

a. You list a port-channel config for one device (DST switch) and it shows 2 links between DST devices and 2 links to a down level TOR (access layer), but what does the config look like on DST2?

We have 2 separate 10GB links, one to each DST switch. What I’m looking for is a way to vPC the 2 from the TOR to each of the DST switches. You talk about it above, but the config below doesn’t match it.

Thoughts?

-C

Hi Chris.

The config looks almost identical on DST2. Obviously you’ll need to swap the keepalive IPs so that DST1 in this case is 10.0.0.1 and DST2 is 10.0.0.2, so they can talk to one another. Once the two switches can communicate over Ethernet1/1, they should be able to run VPC properly, and it’ll let you know in the show commands if the peer-link isn’t up properly. Pretty much everything else, config-wise, has to be the same. In particular, as noted, the configuration for Port-Channel2 (facing the access/TOR switch) on both DSTs /must/ use ‘vpc 102’; more generally, they must both use the same ‘vpc xxx’ command. I just happened to relate the VPC# with the port-channel number for simplicity. Does that make sense?

And then from the TOR perspective, since we’re showing 2 Ethernet links on the port-channel (Po2) to the access-layer, and there would be two more from DST2, in this case it would have 4 Ethernet ports in a single port-channel.

Hope that helps!

Nice blog. Very detailed. Thanks a lot.

Very interesting, John. Thanks.

Very good blog. Neat and clear. Good job.

Excellent blog to understand Nexus. Before I read this blog I thought Nexus was an alien OS. But now it’s part of IOS.

All over the net and blogs, everybody talks about only connecting 2 DISTs to a single access switch when it comes to vPC.

can you pls do a write up on the vPCs between 2 DIST VDC to 2 CORE VDCs ?

Well, my “write up” as such, is “Don’t do it”; there’s no point.

Your layer 3 boundary should be at the distribution (aggregation) layer, and the core should be purely layer 3. At that point, you don’t need the benefits of VPC to avoid Spanning Tree, because there shouldn’t be any STP needed. Therefore simply connect your Core/Agg layers with point-to-point layer 3 links and run something like OSPF so that you get dynamic ECMP routing for next hops. Your DIST switch will see an uplink to core routes via both CORE1 and CORE2, and OSPF can protect you against a failure at the core or DIST layer. You may need to tune timers or use helper protocols like BFD to get the speed of failover that you need in your network.

Does that make any sense?

Excellent overview of vPCs John. Even now, 3 years after you wrote it, it helps understand the basics, how it behaves with FHRP and why it does not make any sense to use vPC northbound from DST to core.

I am now searching for the compatibility issues that might arise between vPC peers if I upgrade one of my Nexus 5548 from 5.1.3 to 6.0.2. Would everything remain working or does vPC require both peers to be running the same NXOS version?

A similar question goes for Fibre Channel switching mode. Can I change one of the vPC peers from the default NPV mode to FC switching without affecting the hosts that are connected to both via vPC using their CNA cards? I think so, but I’m not sure. After all, FC should be unrelated to Ethernet, right?