Cisco launched their Application Centric Infrastructure (ACI) product a couple of weeks ago in New York City, and I was privileged to be able to attend the event as a guest of Gestalt IT’s Tech Field Day.

I gave an overview of the ACI concept and products in a post last week, and in this post I’m looking at how I could deploy ACI and what benefits it may bring to me. As I mentioned previously, I have some reservations about how this is all going to come together in existing networks.

Islands of Cisco ACI

If I understand correctly, Cisco sees a continued usage of existing products like the Nexus 7k and ASR9k in order to provide core routing functionality for “Pods” of ACI-enabled networking on the new Nexus 9k platform. In particular the 7k and ASR would be able to handle the data center interconnect (DCI) part of the equation with technologies like MPLS and Overlay Transport Virtualization (OTV), which are functions unlikely to ever natively arrive in the Nexus 9k platform.

This raises a few interesting questions.

Limited Functionality

If I understand correctly, the Nexus 9k has an intentionally limited feature set. Remember, the NXOS code on the 9k apparently has a 50% simpler code base, so adding in all those other functions would probably mess that up. Can I, then, have a fully Software Defined Network in a given data center? It sounds to me like we will end up with islands of Nexus 9k running ACI, but still need to maintain our legacy core and WAN infrastructure.

Within each island, that’s not a problem, but what about managing traffic between islands? What about managing traffic between data centers? As I understood it, the APIC instance discovers and controls its own local network fabric. By that logic, I will need to deploy a resilient APIC control plane for each island of ACI that I have. Do they talk to each other? Can they? And with a non-ACI island in the middle, can either of them influence traffic going through the legacy core?

To stretch the island analogy almost transparently thin, is this almost “Ships in the night SDN” where each island (pod) is optimized, but chaos reigns once you need to leave the island? I’d like to know how Cisco plans to take the next step up the chain and manage the entire infrastructure – perhaps in a hierarchical controller fashion? I’d at least like to know if there’s a solution on the horizon.

Investment Protection

Imagine you have a network with Nexus 7k, maybe with Nexus 5k/2k in play as well. Where does the 9k fit in your network without throwing out existing hardware? You may well want the benefits of ACI, but since you can only get that with the new Nexus 9k, it’s going to be a hard sell.

On the up side, it was a stroke of genius to allow NXOS on the Nexus 9k to run in Classic Mode or ACI-enabled Mode, so that at least in the first instance the hardware can be deployed simply because it provides great value 40G switching capabilities. It sounds like my core and WAN aggregation layers might be safe from replacement, but when we’ve barely got scratches on our existing Nexus hardware I can’t imagine jumping for the new product except in an expansion scenario.

Trickle Down

One hope for integration across the DC was the promise that the open Southbound APIs that the Nexus 9k supports will also make their way to the rest of the Nexus range. Specifically, this is the idea that you are no longer limited to talking “through” onePK, but the APIs will be provided natively instead. This is huge, in my opinion. And perhaps this is how some higher level controller could leverage the islands of ACI (and their APIC’s data) to coordinate over a wider topology.

Is Nexus 9k The Only ACI Platform?

Right now if you want ACI, there’s only one platform – the Nexus 9k. Will that always be the case? Well, Cisco suggests that the ACI capabilities will not be available in the Nexus 7k, but I don’t know whether to believe that (or whether minds will change later on). We were told when the Nexus 7000 launched that it would not have service modules (presumably another great decision from Cisco’s Switching Council at the time), but in February 2013 I wrote about the launch of the NAM-NX1 – a NAM module for the Nexus 7000. We were told that the Catalyst 6500 was out of steam, and the Nexus 7k was the logical progression for that role, then the Sup-2T was released and, subsequently, the Catalyst 6800. So when we’re told that ACI is exclusive to the Nexus 9000 platform, should we believe it?

The custom ASIC that Insieme brought to the Cisco product line resides on the line cards. On that basis, would it not be possible to put the same ASIC on Nexus 7000 line cards? The Nexus 9000 airflow is very clever and for a greenfield installation it looks like a strong choice, but I can’t see why the Nexus 7000 couldn’t also support it, especially as one of the key selling points of the Nexus 7k was its future-proof expandability: by upgrading fabric cards and Supervisors, the per-slot backplane capacity could increase dramatically over time, thus protecting your investment. Ok then, so shove the Insieme ASIC on a line card and that way you really do protect my investment.

Ah, but the Nexus 9k ACI-supporting version of NXOS is less complex than regular NXOS (“NXOS in name only,” perhaps?), so integrating ACI into the Nexus 7k’s code might be tricky. It does make me wonder how much of a shared code base the Nexus 7k and 9k versions of NXOS really have, and why this improved simplicity can’t be ported backwards into the Nexus 7k? Or is the simplicity really a result of stripping out features in order to optimize the 9k for a very specific architectural purpose; not necessarily a “simplified” codebase, but more of a cut down codebase?

As a potential Cisco customer with existing Nexus 7k switches, if I were to buy Nexus 9k hardware in order to get the ACI features then later on I found that the ACI features became available on Nexus 7k line cards, I would be pretty disheartened about my investment, I imagine.

Product Comparisons

Pulling some numbers from Cisco’s website, I created the table below to try and compare some similarly-sized products in terms of 10/40 Gigabit Ethernet port densities in order to better understand where the Nexus 9000 fits into Cisco’s product range.

| Product | Slots | Switching Capacity | 10GE Ports | 40GE Ports |
|---|---|---|---|---|
| Nexus 7009 | 9 | 8 Tbps | 768 | 96 |
| Catalyst 6807 | 7 | 11.4 Tbps | 56 | 28 |
| Nexus 7710 | 10 | 21 Tbps | 384 | 192 |
| Nexus 9508 | 8 | 30 Tbps | 384 | 288 |

I’ve had to make a few assumptions here (I’m not including Nexus 2000 for example), and I’m a little suspicious of some of the figures (e.g. the 7710’s switching capacity which the data sheet says is 42Tbps but I calculate as 21Tbps = 1.32 x 8 x 2). Nonetheless, this puts the Nexus 9508 firmly at the top of the table in terms of port densities and, if I’m right, switching capability as well.
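For anyone who wants to check the 7710 arithmetic, here is a minimal sketch of it. The post only gives the bare product 1.32 x 8 x 2; reading those factors as per-slot bandwidth in Tbps, the number of I/O slots, and a doubling for full duplex is an assumption made for this illustration, as are all of the names in the code.

```python
# Sanity check of the Nexus 7710 switching-capacity figure quoted above.
# ASSUMPTION: the meaning of each factor is inferred, not stated in the post.
PER_SLOT_TBPS = 1.32   # assumed: per-slot bandwidth in Tbps
IO_SLOTS = 8           # assumed: 10-slot chassis minus 2 supervisor slots
DUPLEX_FACTOR = 2      # assumed: both directions are counted

DATASHEET_TBPS = 42.0  # figure quoted from the data sheet in the text


def switching_capacity_tbps(per_slot: float, slots: int, duplex: int) -> float:
    """Return aggregate capacity as per-slot bandwidth x slots x duplex factor."""
    return per_slot * slots * duplex


calculated = switching_capacity_tbps(PER_SLOT_TBPS, IO_SLOTS, DUPLEX_FACTOR)
ratio = DATASHEET_TBPS / calculated

print(f"calculated capacity: {calculated:.2f} Tbps")
print(f"data sheet figure is {ratio:.2f}x the calculated one")
```

If the ratio comes out close to two, one possible explanation is that the data sheet applies an extra doubling somewhere (or assumes a higher future per-slot figure), but that is speculation rather than anything Cisco has confirmed.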

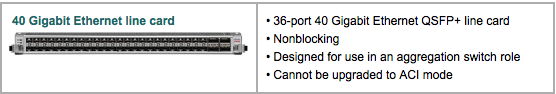

As some people discovered with the Nexus 7000’s varying linecard types though, there’s a little stumbling block here. The 9508 chassis supports three line cards, two 48-port 1/10GE, and one 36-port 40GE. The 40GE card is incredibly dense, but it’s important to read the small print carefully:

The last bullet confirms that this 40GE card will not run ACI mode and cannot be upgraded to ACI mode, so presumably does not have the Insieme ASIC on it yet. That relegates the current 40GE card to Classic Mode switching only.

The 10GE cards fare better, both claiming that they “Can be used in ACI leaf configurations.” But not spine? Or is the idea that you only need the ASICs on the leaf nodes to encapsulate / decapsulate, and the spine just needs to move IP packets and doesn’t require the Insieme ASIC? If so, that of course relegates any WAN aggregation function to a leaf node, something that Cisco hints at in their VMDC Design Guide 3.0, but that solution utilizes FabricPath (so the “spine” is in fact Layer 2 rather than Layer 3), and it’s not a design that I believe everybody buys into, with some preferring a L3 uplink from the spine to a WAN aggregation point for example. Perhaps we’ll see a VMDC Design Guide update incorporating the Nexus 9k? Or maybe we’ll see ACI-enabled 40G cards coming later? Meanwhile, any investment in the 40GE line cards right now cuts off ACI options later, so should be approached with caution.

Positioning

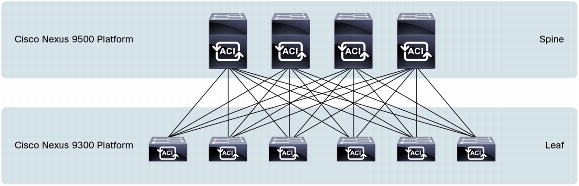

The 9508 is positioned as the spine of a leaf-spine network, but not the core of the network. Cisco’s 9508 product page illustrates the use of the 9508 in a leaf-spine network:

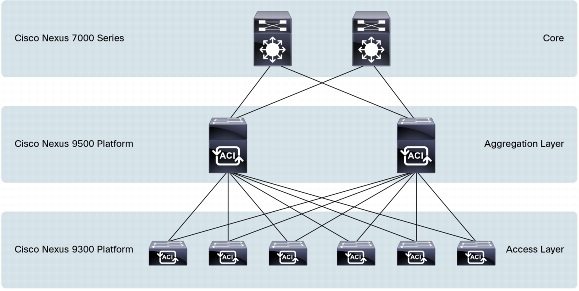

…as aggregation (with 9300s as access below it):

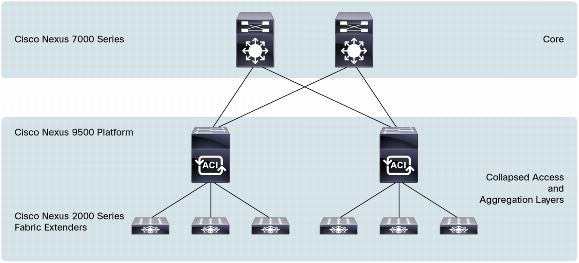

…or as collapsed aggregation/access using Nexus 2000 fabric extenders.

The presence of the Nexus 7000 in two of those three solutions confirms what I was saying above, that a network with multiple pods will have islands of ACI connected by Nexus 7000 (or some other layer 3 solution).

Expressing Opinions

Many of the opinions I have expressed here were already reported by Shamus McGillicuddy in his article on 7000 vs 9000 on TechTarget. I’ve tried to dig a little deeper here than the (necessary) soundbites in that article, because I really think there’s a lot more information needed before I would be ready to advise my clients to take a leap and purchase the Nexus 9000.

I have some sympathy with Cisco here in fact; I would assume that they have had to select which messages to prioritize in their marketing and communications about ACI, and perhaps felt that the bigger uphill battle was explaining ACI and why a hardware-dependent solution is a good deal for customers. That bigger picture is, arguably, far more important to many people than exactly how things work. As an attempted geek though, I can’t help but wonder about the reality of implementing a given solution in a data center, and integrating or migrating my existing network with or to the new solution.

Scheduling went awry and we weren’t able to have a deep technical session with Insieme while we were in New York, and perhaps that has colored my initial impressions of ACI and the Nexus 9000. I definitely find myself thinking that the ‘wait and see’ approach is safest, especially if the ACI technology might show up on a 7700 at some point. Meanwhile, if you want a high density 10/40 Gbps switch, it seems like the Nexus 9508 is an obvious choice, even if you don’t want ACI (and remember, it will still support a big list of Southbound APIs so it can still be part of a Software Defined Network even without an APIC controller).

Your Take

Hopefully shortly I’ll be able to fill in some of the knowledge gaps I have, or correct any misunderstandings, and I’d love to be able to come back with an update on my position. I’ll let you know.

So how do you see the Nexus 9000 fitting into your network? Do you share my concerns, or think they’re irrelevant, or incorrect? I’d love to hear.

(Updated 2013/11/25 to correct Freudian instances of ACIP instead of APIC!)

Disclosure

My travel, accommodation and meals on this trip were paid for by Tech Field Day who in turn I believe were paid by Cisco to organize an event around the launch. It should be made clear that I received no other compensation for attending this event, and there was no expectation or agreement that I will write about what I learn, nor are there any expectations in regard to any content I may publish as a result. I have free rein to write as much or little as I want, and express any opinions that I want; there is no ‘quid pro quo’ for these events, and that’s important to me.

Please read my general Disclosure Statement for more information.

Hi John, good article – the questions you raise are valid and interesting.

In terms of positioning, the ACI announcement did bring some confusion into Cisco’s current DC portfolio.

Few pennies from me:

1. Will ACI come down to Nexus 7K?

I think you are on to something here.

Probably not intended to be so right away. With Insieme developed outside Cisco, I doubt there were plans for that.

I mean there’s already Cat6500 to N9K migration guides http://www.cisco.com/en/US/prod/collateral/switches/ps9441/ps13386/guide-c07-730158.html

BUT – along with service modules, another thing was never supposed to make it to Nexus 7K – MPLS.

So yeah – we might see ACI on 7K 🙂 It will probably involve all new Sup, Line Cards and perhaps a feature cut. ACI lite?

2. Or maybe we’ll see ACI-enabled 40G cards coming later?

Yup, I’d put my money on that. Probably the development of ACI 40G non-blocking card took longer than expected.

I’d love to speculate on ACI design options, especially around inter-DC scenarios. Or ACI integration with OpenDaylight; so far all materials come in “will be supported/developed” notation.

Best wait for more info from Cisco though.

I’d love to hear more on your ongoing research into ACI. It’s not every day that someone who blogs is an actual customer rather than a vendor head or, worse, press. 🙂

But it’s best we wait for some official info from Cisco.

One more question I’d raise – how does ACI fit into OpenDaylight?

Hi Kanat,

Let me try to answer your questions.

1. “Will ACI come down to Nexus 7K”. Both N7K (N7700) and ASR9K platforms are positioned to extend fabric connectivity leveraging WAN technologies, such as MPLS and all its flavors. N7K (and N7700) by itself can also be used in other solutions, such as DFA.

2. “…the development of ACI 40G non-blocking card took longer than expected”. N9K is the foundation for Application Centric Infrastructure and it is being rolled out in a phased manner with hardware coming first (which you can run in standard mode) followed by application policy model, the ACI. There is no delay in ACI 40Gb line card.

3. “I’d love to speculate on ACI design options, especially around inter-DC scenarios”. No need to speculate 🙂 As we get closer to ACI release date, all your questions will be answered. There are several deployment models you can follow based on your policy domain requirements.

I hope this is helpful.

Thank you,

David

@davidklebanov

P.S. I work for Cisco.

Greetings David,

“Both N7K (N7700) and ASR9K platforms are positioned to extend fabric connectivity leveraging WAN technologies, such as MPLS and all its flavors. N7K (and N7700) by itself can also be used in other solutions, such as DFA.”

so that’s a definite no-no, right? 🙂 I was there when BU guys swore on blood that SM will never make it to Nexus )

“N9K is the foundation for Application Centric Infrastructure and it is being rolled out in a phased manner with hardware coming first (which you can run in standard mode) followed by application policy model, the ACI. There is no delay in ACI 40Gb line card.”

I don’t see a rationale behind forcing a customer into a fork-lift upgrade if he wants both high density 40G and ACI. Then again I don’t expect a Cisco SE to go against the party line in an open forum 🙂

I’m former Cisco myself. So I know the game 🙂

I think ACI is a good concept and it will find its customers. Networking is a box-hugging culture and it will take more than a few years to change that.

But the portfolio fragmentation and lack of roadmap clarity is a valid customer concern. Especially with everyone beating the SDN drums in their ears.

I hope that most questions around ACI will be answered in near future.

Cheers.

Hi John,

Meanwhile, the “benefits of ACI” can be obtained today in a software based solution (eg. VMware NSX). So that’s something folks will need to reconcile as they contemplate all of the concerns you’ve raised here. A software solution such as NSX will work on current Nexus 7K/5K/2K investments, as well as the Nexus 9000, with the currently available 40G linecards, running in the currently available NXOS operating mode.

Cheers,

Brad

disclosure: I’m an employee of VMware NSBU

Hello my friend,

While NSX offers some interesting day-0 provisioning advantages over existing networking models, it is taking a drastically different path from ACI to achieve that. I will focus on just one difference here.

NSX takes a top-down approach, advocating the controller model for disseminating reachability information, while ACI leverages promise theory (http://www.cisco.com/en/US/solutions/collateral/ns340/ns517/ns224/ns945/white-paper-c11-730021.html). This is equivalent to your boss dictating to you exactly how you need to perform your tasks (micromanaging you) vs your boss giving you an assignment, which you can then complete leveraging your own skills. I will let you decide which model you prefer and which manager you would rather work for 🙂

Thanks,

David

@davidklebanov

So,

9 months later, what do you think now?

Is there a more cost effective 40G/10G clusterable switch available?