I was talking to a colleague this week about Facebook’s recent announcement of their “Wedge” open switch platform. The promise of this switch is pretty cool stuff, and our discussion of the premise behind the Wedge switch led on to Pluribus Networks, who presented their products to us at Networking Field Day 7.

So with that in mind, let’s look at the Wedge switch as well as Pluribus, because the similarities are striking.

The Facebook Wedge Switch

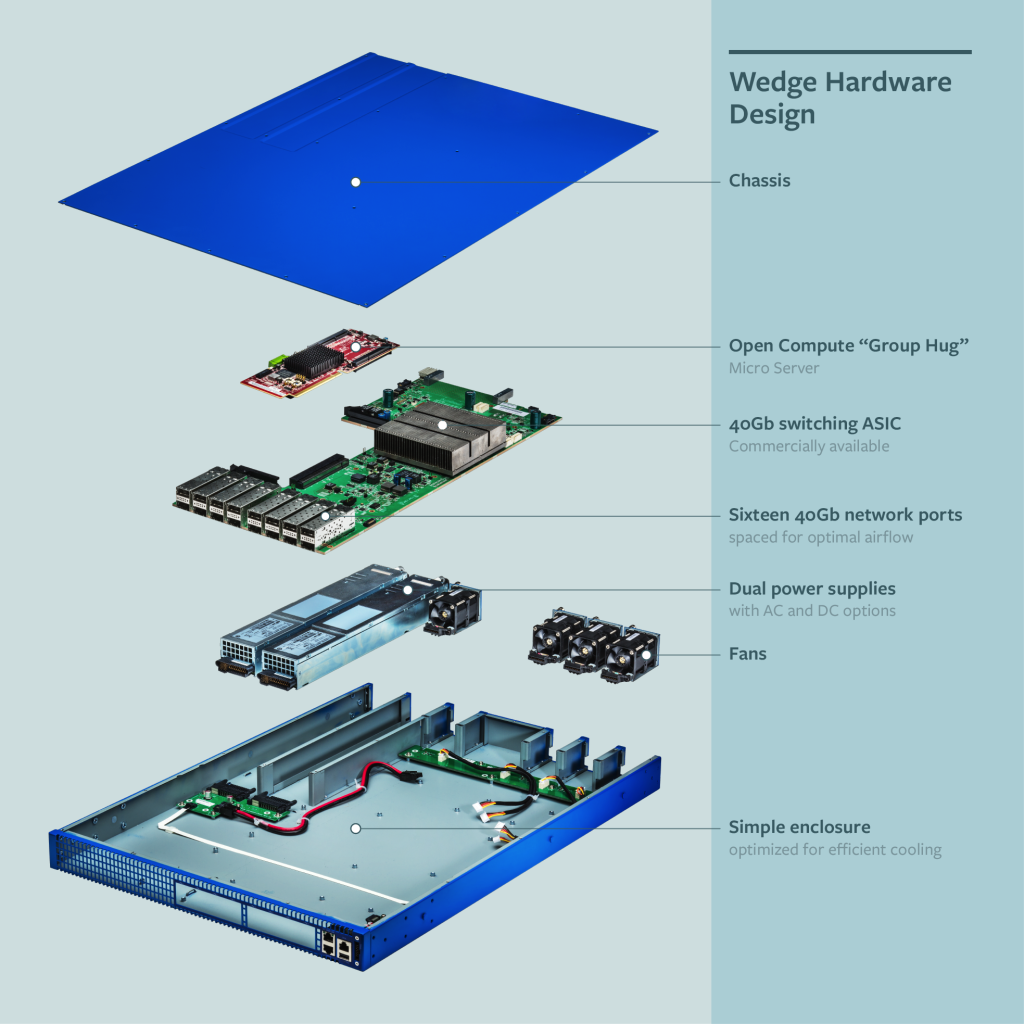

I’m going to borrow the architecture diagram from Facebook’s post about the switch so we have something to talk about:

So what do we have? First we have a controller – a micro server running a customized Linux derivative called FBOSS. Then from an Ethernet perspective we have “Sixteen 40G network ports”, apparently expandable (according to other reports) to thirty-two 40G network ports, and those ports are designed to use breakout cables so each one provides 4 x 10Gb Ethernet connections. The diagram notes that it is “Commercially available” – in other words, it’s merchant silicon.
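
To put numbers on those breakout cables, here is a quick back-of-the-envelope sketch. The only inputs are the figures quoted above (16 or 32 ports at 40G, each split into 4 x 10G); the function name is my own and nothing here comes from Facebook.

```python
# Port-count arithmetic for a 40G switch using 4 x 10G breakout cables.
LANES_PER_40G_PORT = 4   # each 40G port breaks out into 4 x 10G
LANE_SPEED_GBPS = 10


def breakout_capacity(ports_40g: int) -> dict:
    """Return the 10G port count and aggregate bandwidth for a 40G port count."""
    ports_10g = ports_40g * LANES_PER_40G_PORT
    return {
        "ports_40g": ports_40g,
        "ports_10g": ports_10g,
        "total_gbps": ports_10g * LANE_SPEED_GBPS,
    }


if __name__ == "__main__":
    for count in (16, 32):
        print(breakout_capacity(count))
```

So the sixteen-port configuration gives 64 x 10G connections, and the thirty-two-port configuration gives 128.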

I laughed when I read that, because unless it’s a tremendous coincidence, the Broadcom Trident II chipset supports up to 32 40Gb Ethernet ports. This is perhaps the most common chip we’re seeing in the marketplace right now – everybody is using it in their switching products because it’s a tremendously well-specified chip. But if everybody uses the same chip, then what’s the differentiator for all the switches out there? Answer: the software. Facebook is betting that their software running on that micro server will make the difference.

In other words, Facebook has taken merchant silicon and added a little Linux server to it as an embedded controller.

Pluribus

Coincidentally, Pluribus Networks has a product that they term a “Server Switch™” which sounds remarkably similar; they use the Broadcom Trident II chipset, or an Intel Alta chipset (another similar merchant silicon solution) to provide Ethernet switching, then they embed a server in the same chassis to act as a controller.

The difference with Pluribus might be that they have taken the “server” part to new and interesting levels, and clearly see that function as way more than just a network controller, although that’s one of the functions it provides in their switches.

Pluribus see the market going a few ways:

- Box centric (e.g. white box, like Pica8, BigSwitch, Cumulus)

- Traditional vendors coming from the world of custom ASICs (e.g. Arista, Juniper, HP)

- Software Centric (e.g. VMware, PLUMgrid)

- Hardware Centric (e.g. Cisco)

Pluribus sits rather neatly as a hardware and software solution. When I talked to my colleague, what became clear to me is that I need to go back and re-educate myself on why this is such a cool idea, because as we talked about the idea of merging a switch and a server into a Top of Rack (ToR) device, I realized that I couldn’t articulate what the benefit was, and how this was any better than just pushing a VM out to do the same thing.

The Freedom Server Switch™

Pluribus took a server platform with a NIC, and effectively replaced the regular server NIC with a Broadcom Trident II chip (or a similarly specified Intel chip). They add a Marvell Network Processor Unit (NPU) that talks to the switching chip, and the server itself is an ‘off the shelf’ dual socket Xeon CPU with up to 1TB of RAM. Yes that’s right, your ToR switch could have up to a Terabyte of RAM. The server side is designed to connect to peripheral devices, e.g. Fusion IO for high speed storage, and you can add a SAS card for JBOD (Just A Bunch of Disks) applications. There’s even a PTP (Precision Time Protocol) master clock card option, which means you could service PTP needs within each rack (actually a surprisingly useful thing to be able to do given the hop count limitations for PTP).

Not only is the NIC replaced with the Broadcom Trident II, but Pluribus also decided that the standard architecture was insufficient for their needs. They don’t rely on any vendor-supplied software; instead they built their own from scratch, which has allowed them to map the Broadcom ports directly into OS memory and provide up to a 60G CPU-to-switch communication channel. Compare that with a traditional switch model where the controller talks to the switch chip over a low speed channel. Pluribus believe that being able to control the switch at high speed natively within the OS is important, and rather than using a vSwitch for virtualization they offer a Network Hypervisor which can present a virtual switch chip to the VMs.

Netvisor™ OS

With a Xeon-based server platform sitting there, the next decision is what OS to run on it? Pluribus made the decision to create their own platform yet still provide open access to the hardware. Enter the Netvisor™ OS. Netvisor is a distributed network operating system that also – say Pluribus – offers “bare-metal hardware programmability like a server OS, bare-metal hypervisor virtualization like a server OS, and hardware independence like a server OS”. To continue stealing their phrasing, “The Netvisor OS manages the L2/L3 network topology and it presents to the upstream L4-L7 applications a simplified, logical view of the fabric by abstracting and the physical network topology.” In other words, Netvisor virtualizes the network, and masks the actual complexity of the connectivity so that higher level applications can make requests without needing to understand the topology. We’ll see shortly how that can be useful. Netvisor also makes it easy to create isolated virtual networks (a vnet) including ports from anywhere in the fabric.
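
To make the vnet idea a little more concrete, here is a toy model of what “isolated virtual networks including ports from anywhere in the fabric” could look like. This is purely my own illustration and not Netvisor’s data model or API: the class name, the method names, and the simplification that a port belongs to at most one vnet are all assumptions.

```python
from typing import Dict, Set, Tuple

Port = Tuple[str, int]  # (switch name, port number); a hypothetical identifier


class FabricVnets:
    """Toy registry of vnets; assumes a port belongs to at most one vnet."""

    def __init__(self) -> None:
        self._owner: Dict[Port, str] = {}

    def create_vnet(self, name: str, ports: Set[Port]) -> None:
        """Assign ports (on any switch in the fabric) to a new vnet."""
        taken = {p for p in ports if p in self._owner}
        if taken:
            raise ValueError(f"ports already assigned: {sorted(taken)}")
        for port in ports:
            self._owner[port] = name

    def can_talk(self, a: Port, b: Port) -> bool:
        """Two ports may exchange traffic only if they share a vnet."""
        owner_a = self._owner.get(a)
        return owner_a is not None and owner_a == self._owner.get(b)


if __name__ == "__main__":
    fabric = FabricVnets()
    fabric.create_vnet("blue", {("tor1", 1), ("tor7", 12)})
    fabric.create_vnet("red", {("tor1", 2)})
    print(fabric.can_talk(("tor1", 1), ("tor7", 12)))  # same vnet, different racks
    print(fabric.can_talk(("tor1", 1), ("tor1", 2)))   # same switch, different vnets
```

The point of the sketch is that membership is defined by the vnet, not by which physical switch a port happens to live on.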

In case you’re thinking that Netvisor is all very well but you’re still stuck with Pluribus hardware, this is not so. Pluribus says that Netvisor can run on any platform as long as it is powered by an x86 CPU and an Intel (Alta) or Broadcom (Trident family) chip. Mind blown?

That Distributed Network Operating System

Managing a Pluribus network appears to be analogous to managing a virtual-chassis. All of the switches are considered to be part of the same network resource, but there is no central point of control – this is a distributed system after all. All the Pluribus nodes share the same view of the network (e.g. MAC, ARP, connections, IP flows). That’s handy because with every switch sharing ARP and MAC tables, you can suppress broadcasts over the network and answer them locally. The network is like a single device when it comes to managing it.
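
Here is a minimal sketch of why a shared ARP view lets you suppress broadcasts. It is a toy model under my own assumptions: the class names are invented, and a single Python dictionary stands in for the state that the real nodes would replicate between themselves.

```python
from typing import Dict, List, Optional


class Fabric:
    """Toy stand-in for the fabric-wide view: one IP -> MAC table for all nodes."""

    def __init__(self) -> None:
        self.arp_table: Dict[str, str] = {}
        self.flooded: List[str] = []  # ARP targets that had to be broadcast


class Switch:
    def __init__(self, name: str, fabric: Fabric) -> None:
        self.name = name
        self.fabric = fabric

    def learn(self, ip: str, mac: str) -> None:
        """A binding learned on any switch becomes visible to every switch."""
        self.fabric.arp_table[ip] = mac

    def handle_arp_request(self, target_ip: str) -> Optional[str]:
        """Answer locally if the fabric knows the target; otherwise flood."""
        mac = self.fabric.arp_table.get(target_ip)
        if mac is None:
            self.fabric.flooded.append(target_ip)
        return mac


if __name__ == "__main__":
    fabric = Fabric()
    tor1, tor2 = Switch("tor1", fabric), Switch("tor2", fabric)
    tor1.learn("10.0.0.5", "00:11:22:33:44:55")
    # A host behind tor2 asks for 10.0.0.5; tor2 can reply without a broadcast.
    print(tor2.handle_arp_request("10.0.0.5"))
    print(fabric.flooded)
```

Only requests for addresses that nobody in the fabric has seen yet would still need to be flooded.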

vFlow

This is odd. When I search Google for “Pluribus vflow” I really don’t get good results, yet vFlow is a key component of the Pluribus solution. Maybe they forgot to mention the product name on the website, or maybe the name has changed, but it’s weird not to find the Pluribus site as the top hit.

With that said, do you want to capture traffic? With a due sense of incredulity, we watched a demo where, by logging into any switch in the network, it was possible to initiate a packet capture (with appropriate filters) that was instantly applied fabric-wide. Using the ‘vflow-snoop’ command, the capture was displayed in real time, like tcpdump. I love that the capture can be initiated anywhere in the fabric, without having to figure out where the devices really sit. It beats the heck out of determining which optical tap to direct to a sniffer. Pluribus say that their lowest spec box (a 1U switch) can probably capture 700–800 Mbps, whereas the 2U switches can capture up to 10Gbps.
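
Conceptually, a fabric-wide capture means defining one filter and having it applied on every switch, with the matches merged into a single stream. The sketch below models that idea in plain Python. It is not vflow-snoop syntax or any Pluribus API; the `Packet` fields and the `fabric_capture` function are hypothetical names of my own.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Packet:
    ts: float      # timestamp in seconds
    switch: str    # switch that saw the packet
    src: str
    dst: str
    dport: int


def fabric_capture(
    traffic: Dict[str, List[Packet]],
    match: Callable[[Packet], bool],
) -> List[Packet]:
    """Apply one filter to every switch's traffic and merge hits in time order."""
    hits = [pkt for pkts in traffic.values() for pkt in pkts if match(pkt)]
    return sorted(hits, key=lambda pkt: pkt.ts)


if __name__ == "__main__":
    traffic = {
        "tor1": [
            Packet(2.0, "tor1", "10.0.0.1", "10.0.1.9", 443),
            Packet(3.0, "tor1", "10.0.0.1", "10.0.1.9", 80),
        ],
        "tor2": [Packet(1.0, "tor2", "10.0.0.7", "10.0.1.9", 443)],
    }
    # One filter, written once, with no need to know where 10.0.1.9 lives.
    for pkt in fabric_capture(traffic, lambda p: p.dst == "10.0.1.9" and p.dport == 443):
        print(pkt)
```

The operator never has to name a switch or a port in the filter, which is the part I found so appealing in the demo.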

Interestingly, Pluribus also demonstrated connection monitoring over the fabric. The “show connections” command allows you to easily find the big data users anywhere on the fabric, and it’s easy to slice and dice that data. This information of course is available not just through the CLI but also through APIs, because who doesn’t have an API these days? You can even look inside VXLAN and detect flows there too. It’s very impressive to have that level of analysis available right in the box, within your NOS, without any external analysis needed.
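
To illustrate the kind of slicing and dicing I mean, here is a small sketch that finds the top talkers from a set of connection records. The record layout is invented for the example and is not the actual output format of “show connections” or of the Pluribus API.

```python
from collections import Counter
from typing import Iterable, List, Tuple

# Hypothetical record: (switch, client_ip, server_ip, bytes_transferred)
Connection = Tuple[str, str, str, int]


def top_talkers(connections: Iterable[Connection], n: int = 3) -> List[Tuple[str, int]]:
    """Sum bytes per client across the whole fabric and return the n largest."""
    totals: Counter = Counter()
    for _switch, client, _server, nbytes in connections:
        totals[client] += nbytes
    return totals.most_common(n)


if __name__ == "__main__":
    records = [
        ("tor1", "10.0.0.1", "10.0.1.9", 5_000),
        ("tor2", "10.0.0.7", "10.0.1.9", 90_000),
        ("tor3", "10.0.0.1", "10.0.2.4", 20_000),
        ("tor3", "10.0.0.8", "10.0.2.4", 1_000),
    ]
    for client, nbytes in top_talkers(records, n=2):
        print(client, nbytes)
```

Because the records come from every switch, a client whose traffic is spread across several racks still shows up as a single total.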

This fabric-wide visibility also extends to manipulation of flows (load balancing, re-routing, etc.) without needing to understand the actual infrastructure. Just program the change you want, as if it’s a single L3 device, and Netvisor can do the rest for you.

So What?

Ok, so let’s assume that Pluribus offers a very neat, distributed Network Operating System with some super-neat analysis tools. Why have those dual socket Xeons and storage connectivity options?

This is where the Server comes into Server Switch, and also where my conversation with my colleague faltered. I remembered that the Pluribus switch could be used in that ToR position as a localized DHCP, PXE, DNS server, as well as performing SLB functions near the server. You can run VMs on the switch, by the way, so you don’t have to adopt a vendor-specific way of offering these services; instead you can just spin up a VM that offers the services you want, right there in the rack.

But what’s the killer app? What can the Pluribus solution do that some other solution can’t do? Why not run another network fabric then use the common virtualization solutions to provide services in each rack?

Well, perhaps there isn’t a killer app as such. That said, having a virtualized network, storage and compute platform as your ToR switch does allow you to deploy a big (even up to global) network fabric, and have Network Functions Virtualization (NFV) features embedded within the rack – and given the direct management of the Ethernet ports and the virtualization of the fabric, this may offer some extensive capabilities pushed right out to the edge. In terms of reducing latency, pushing functions out to ToR could be a huge saving, and in low latency environments like trading platforms, that could be the edge that makes a trading platform more competitive. The reduction in network bandwidth by pushing some storage-intensive functions out to the edge could also be significant. Oracle and Pluribus certified Solaris 11 on the Freedom platform, so this is serious business.

Benefits

From what I can see, the biggest benefit of the Pluribus solution is the distributed switch fabric and the “always on” monitoring which can be tapped into across the entire fabric as easily as on a single switch. This is not intended to replace your regular compute resources; it’s simply a more intelligent device than the regular ToR switch – although there are some applications where using that ToR compute resource could well provide a real advantage.

Facebook’s solution is less well defined so far, so it’s not clear whether that micro server will be usable in a similar way to Pluribus. It looks like the Wedge switch’s micro server is likely to be dedicated to a controller role and will not be of a class that can offer ToR compute services, let alone provide access to storage in the way that Pluribus can. But both platforms take merchant silicon and try to add something more to the pot. Pluribus seem to simply take that concept a bit further, but until we see more about “FBOSS” it’s going to be hard to know for sure. Somehow I doubt we’ll see FBOSS offering server virtualization in the top of rack.

Conclusions

Let’s put aside the Facebook switch for a moment as that looks like switch + controller. When it comes to Pluribus, yeah, I’m still looking for the justification for big compute in a ToR switch. Maybe highly functional NFV is it. DHCP and PXE boot services? I can do that without a major problem already on the compute platform. VMs? Got it already. For switching services, Pluribus seems very cool indeed. The analytics potential is huge, and the ability to slice and dice network data and captures as if everything runs on a single switch could offer significant operational savings to many companies.

Networking Field Day 7

Pluribus can do a much better job than I of explaining their value proposition and feature set, so here are the videos of their sessions from NFD7. These are worth watching for the Netvisor features alone, and if you have time I do recommend you watch all of them. It’s a really interesting proposition with potential uses I suspect will be created by the users rather than dictated by Pluribus. After all, the platform’s there for you to use; you decide how to use it.

Pluribus Netvisor Analytics Application

Pluribus Netvisor Deep Dive and Control Plane Demo

Pluribus Networks Background, Market Opportunity, and Key Differentiation

Pluribus Networks Customer Case Studies

Pluribus Server Switch and Netvisor Technology Overview

Pluribus vFlow and SDN Demo

Disclosures

Pluribus was a paid presenter at Networking Field Day 7, and while I received no compensation for my attendance at this event, my travel, accommodation and meals were paid for by Tech Field Day. I was explicitly not required or obligated to blog, tweet, or otherwise write about or endorse the sponsors, but if I choose to do so I am free to give my honest opinions about the vendors and their products, whether positive or negative.

Please see my Disclosures page for more information.
