There’s a lot of talk at the moment about the evils of layer 2 networking, and most of the vendors are suggesting that they have the magic bullet that will remove layer 2 from your life, or at the very least will manage to hide or manipulate it sufficiently that you need never consider Spanning Tree Protocol ever again. I’ve touched on it a little on this blog, with a quick look at some of the options, and a drill down to TRILL / Cisco’s FabricPath.

Reading all of this made me reminisce slightly about how STP has impacted me in the past, and a few memories came to mind that I thought I’d share with you for comedy value – and perhaps sympathy.

Company A – National WAN

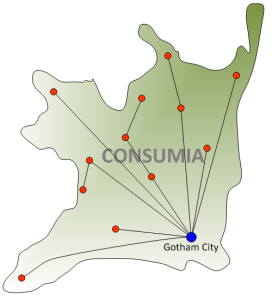

This company were great to work for – the network team were so laid back the company had to pad the floor. For the most part things ran well, which is probably why it was feasible to take things so easy, and under those circumstances, I’m not going to complain as it made for a very friendly and pleasant working environment with some really great team mates. Still, the network did have some fundamental problems which I can outline in this simple diagram:

As you can see, Company A’s network spanned the entire country of Consumia, centered on their main data center in Gotham City; a typical hub and spoke design, albeit with a relatively low bandwidth WAN given that this was over 10 years ago. Wait, did I mention that they ran DECnet Phase IV and had DECnet nodes on most sites? I probably should have done, as they were bridging everywhere – and that meant they were running Spanning Tree Protocol over their entire network; the whole country was a single STP domain. On the upside, it’s a hub and spoke without any loops, and if you were forced to do this now, you could probably make some assumptions and filter BPDUs over the non-meshed WAN links so you could force some artificial limits on STP and keep it within each site. Of course, that would require us to have a bpdufilter command on their spanking new Cisco Catalyst 5000 switches, and that’s a relatively recent command. As a side note, I tried to find out when bpdufilter was introduced to IOS to back this up, and while I failed (I didn’t look for long), I did bump into this post from Brad Reese in 2008 saying that you couldn’t disable STP per port – so it’s within the last few years.

The point of all this is to say that running one big fat STP domain country-wide absolutely sucks. A server reboot in Spankletown meant Topology Change Notifications (TCNs) flying over the network, and the inevitable flooding of traffic – across the low bandwidth WAN – as the CAM table entries were forced to age out after 15 seconds.

This was also the architecture that first introduced me to the concept of what we now call unicast flooding. At the time, Cisco TAC really didn’t understand why on some switches we were seeing periodic spikes in traffic on multiple user ports accompanied by dropped packets all over the place. The host ports were all 10 Mb/s half duplex, so any large amount of traffic hitting those would have a big impact. In the end, after managing to grab a packet capture and watching the CAM tables like a hawk, I figured out what was happening – a gateway’s MAC address was being dropped from the CAM tables, and all the WAN-bound traffic got flooded, killing the host ports quite badly. Response from TAC: “I guess that sounds like a plausible explanation for the behavior, though I’ve not seen that before.” Helpful. I wrote a white paper on it and for a brief moment I was on the cutting edge of network cock ups (although I sincerely doubt I was alone in discovering or documenting it). Either way, about a year later, Cisco had a nice name for it (unicast flooding) and a web page discussing it. Yay.
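To make the mechanism concrete, here’s a toy model of it in Python. It’s a sketch under assumptions rather than how any real switch is implemented: the `LearningSwitch` class, the port numbers and the MAC names are all made up for illustration, the 300-second aging time is the commonly quoted default, and the gateway is assumed to stay silent on this switch so its entry never gets refreshed.

```python
class LearningSwitch:
    """Toy learning bridge: learns source MACs, floods unknown destinations."""

    def __init__(self, ports, aging_time=300.0):
        self.ports = list(ports)
        self.aging_time = aging_time  # seconds an entry survives without a refresh
        self.cam = {}                 # mac -> (port, time last seen as a source)

    def receive(self, now, in_port, src, dst):
        """Return the list of ports the frame is sent out of."""
        # Learn (or refresh) where the sender lives.
        self.cam[src] = (in_port, now)

        entry = self.cam.get(dst)
        if entry is not None and now - entry[1] <= self.aging_time:
            return [entry[0]]         # known destination: one port only

        # Unknown or aged-out destination: drop the stale entry and flood.
        self.cam.pop(dst, None)
        return [p for p in self.ports if p != in_port]


# Hypothetical layout: gateway on port 1, hosts on ports 2, 3 and 4.
sw = LearningSwitch(ports=[1, 2, 3, 4])

# t=0: the gateway sends one frame, so the switch learns it on port 1.
sw.receive(now=0, in_port=1, src="gw", dst="host-a")

# t=10: normal aging, host-a's frame goes to the gateway port only.
before = sw.receive(now=10, in_port=2, src="host-a", dst="gw")

# A topology change shortens aging to 15 seconds (the forward delay).
sw.aging_time = 15.0

# t=30: the gateway entry is now stale, so the same frame is flooded.
after = sw.receive(now=30, in_port=2, src="host-a", dst="gw")

print("before topology change:", before)
print("after topology change: ", after)
```

With normal aging the host’s frame goes only to the gateway port; once the aging time has been cut to 15 seconds, the stale entry is gone and the same frame is copied to every other port, which is exactly what hurts on shared half-duplex host ports.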

Another side note: At this point in time – and it kind of scares me how recently this was the case – TCP/IP was still relatively new to the company – they ran it, but few people understood subnetting and addressing (and that’s one of the reasons I was there). Company A had been advised by a contractor (prior to my arrival there) that they should use the 128.0.0.0/8 IP address range for all their loopbacks, and they had faithfully done so. I still don’t understand the advice. What I do know is that it meant that users couldn’t access any of the web sites that seemed to sit in that space, which appeared to include a lot of .edu sites. Bizarre.

Company B – Very Large Data Centers

I won’t identify the industry or country, but these guys were happily running between 400 and 800 VLANs on their various Cisco Catalyst 6500s. The Aggregation/Core all had Sup720s, but the older access layer switches as I recall had Sup 2s. Holy crap, when you lost a trunk link and STP recalculated, it was something special to see how long it took the poor old Sup 2s to work their way through recalculating that many VLANs at the same time. Sadly, they often couldn’t keep up, and while the rest of the devices had stabilized they’d finally catch up on processing the BPDUs and trigger another calculation. Ever seen a network implode? Planned changes had to be done very carefully indeed, working on smaller groups of VLANs at any one time. Yes, we did remove this problem in the end 🙂

Company C – Highly Resilient Network

This company had a highly resilient network – everything in pairs, everything meshed, and VLANs trunked all over the place. Trunking VLANs through your Core, for example, is not a great design choice, but I get it – it was kind of necessary given the applications in use and the rapid organic growth (code word for ‘chaotically fast expansion’) of the network and services. Sometimes the divergence of logical topology and available data center racks leaves you with some insoluble opportunities.

James Hacker: May I remind the Secretary of State for Defence that every problem is also an opportunity?

Several Ministers: Hear, hear!

Sir Humphrey Appleby: I think that the Secretary of State for Defence fears that this may create some insoluble opportunities.

– “Yes, Prime Minister”, episode #2.1 “Man Overboard”

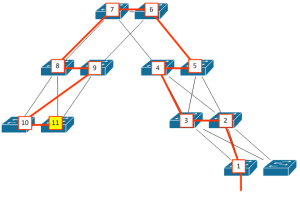

One of the issues faced as a result was how quickly you hit the spanning tree diameter for which the default timers are intended (IEEE recommends a maximum diameter of seven). So here’s a logical representation of a typical ‘resilient’ VLAN – a given VLAN would be present on all devices and trunked in all directions for maximum resiliency:

To calculate the ‘diameter’ of a network, you figure out the worst-case path to get from one switch to another switch without crossing any switch more than once; you count the first switch as #1, and count through to – and including – the last switch in the path. Here’s a potential ‘worst’ case path for the network above where for convenience I’ve done the counting too:

Yep, that’s eleven hops. Even if we removed the lower right pair of switches, it’s still 10. Cisco offers some tuning options (the ‘diameter’ command) that can automatically tweak STP timers for you. In a smaller diameter network you could reduce the diameter for faster convergence; in a larger diameter network like this one, you can increase the diameter with a side effect of slower convergence. In other words, you make the protocol more reliable by having to increase the length of time the network is not functioning properly. Awesome choice to have to make! Ultimately, it’s better to try to reduce the diameter – which is what we ended up doing.
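Both the counting rule and the timer trade-off can be sketched in a few lines of Python. Treat this as an illustration under stated assumptions: the six-switch topology is hypothetical (it is not Company C’s network), the function names are mine, and the timer formulas are the ones I recall from Cisco’s STP timer tuning guidance, with half seconds rounded up. Applying them to a diameter above seven is an extrapolation purely to show the trend.

```python
def diameter(links):
    """Longest loop-free path, counted in switches (first and last included).

    Brute-force depth-first search; fine for a handful of switches,
    far too slow for a large mesh.
    """
    best = 0

    def walk(node, seen):
        nonlocal best
        best = max(best, len(seen))
        for nxt in links[node]:
            if nxt not in seen:          # never cross a switch twice
                walk(nxt, seen | {nxt})

    for start in links:
        walk(start, {start})
    return best


def stp_timers(dia, hello=2):
    """Return (max_age, forward_delay) in seconds for a given diameter.

    Assumed formulas (as recalled from Cisco's tuning guidance):
      max_age       = 4*hello + 2*dia - 2
      forward_delay = (4*hello + 3*dia) / 2, rounded up here
    """
    max_age = 4 * hello + 2 * dia - 2
    forward_delay = (4 * hello + 3 * dia + 1) // 2
    return max_age, forward_delay


def worst_case_convergence(dia, hello=2):
    """Max age expiry plus listening and learning (two forward delays)."""
    max_age, forward_delay = stp_timers(dia, hello)
    return max_age + 2 * forward_delay


# Hypothetical 'everything in pairs, everything meshed' topology.
TOPOLOGY = {
    "core1": ["core2", "agg1", "agg2"],
    "core2": ["core1", "agg1", "agg2"],
    "agg1": ["agg2", "core1", "core2", "acc1", "acc2"],
    "agg2": ["agg1", "core1", "core2", "acc1", "acc2"],
    "acc1": ["acc2", "agg1", "agg2"],
    "acc2": ["acc1", "agg1", "agg2"],
}

print("diameter of sample topology:", diameter(TOPOLOGY))
for dia in (7, 11):
    print(dia, stp_timers(dia), worst_case_convergence(dia))
```

For the default diameter of seven the formulas give a max age of 20 seconds and a forward delay of 15, which matches the familiar defaults and a worst case of 50 seconds. Plug in eleven and you get 28 and 21, or about 70 seconds of waiting, which is the unpleasant trade-off in numbers.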

Today’s Challenges

It’s a pain having so many competing choices out there to eliminate layer 2 problems. On the other hand, thank goodness we have all these options to choose from, both in terms of solutions to eliminate STP and in terms of the knobs we have to tune STP’s behavior and make it work better in more situations. Think back to when we didn’t have Rapid STP, or portfast, or bpdufilter and more. I think I’d rather be trying to solve problems with too many options than too few.

Remind me I said that in a year, would you? 🙂
