As a consultant, I have had the pleasure of working for a large number of companies in a range of industries, and one of the things that interests me in all of them is seeing the varied approaches to network changes, from the initial determination of what change needs to be made, following the change through some kind of Change Control process (in most cases), through to execution on the production network. The one thing that’s consistent with every company is that they aren’t consistent – they all have their own way of doing things, and while there’s some fundamental commonality between them, each is usually missing steps that I would consider important.

I’m not going to try to document a full change control process here (though of course, if you want to engage me to consult for your company, we can discuss that), but instead I present to you:

The Network Engineer’s Guide to Making Changes

A common sense approach to making supportable and repeatable changes to your network.

Caveats

Before we get stuck in, let me make the following brief points:

- This is a long post, but please stick with it. I thought it better to put it all in one place than to split over multiple posts.

- Apply as necessary. What I mean is, use your common sense to determine which areas of this guide are really necessary for a given change. And while you’re doing that, remember that common sense isn’t as common as we’d all like it to be. 🙂 Personally, I err on the side of caution, but do your own risk assessment.

1. Background

I’ve made a lot of network changes over time. I wish I could tell you that every single one of them was successful – of course they were not – but I do have a pretty solid track record, all things considered. I’ve seen many approaches to planned change (i.e. changes that are scheduled, rather than those required to resolve an outage), and they typically fall somewhere between these two scenarios:

Chaotic

An engineer decides a change is needed and figures out in their head what commands are needed. They log into the core routers during peak hours and make the change without any requirement for notification or approval. Five hours later (after the business day has ended), a critical problem is discovered by a user at a remote site. After three hours of aimless troubleshooting by the support team, somebody finally happens to call the engineer that made the change earlier in the day and ask for help with the problem. The engineer realizes what has happened, plays dumb, pretends to troubleshoot, then magically manages to solve the problem and is the team’s hero for finding and fixing the problem so quickly! In their hurry to back the change out, the engineer has actually introduced a different problem which is discovered three hours later. This time, the engineer is able to restore the configuration to its former state, and the problems (as far as they know) are fixed. With the network problems finally resolved, everybody moves on. Two days later, the same thing happens again, and our hero once again is showered in adulation.

Process Driven

An engineer decides a change is needed. They work up a candidate configuration in the lab, then create a change script documenting the purpose of the change, the expected outcomes, a validated sequence of backout commands, a pre- and post-change test plan, a notification list, and a risk assessment. The script is submitted to a team of the company’s top network engineers for review and approval; once gained, the engineer submits a change request into the company’s Change system, and waits for the 17 required approvals to be completed. After 16 of the 17 approvals are in place, the change must go before the Change Advisory Board (CAB) who review the change in the context of the risk assessment, other scheduled changes, freezes and other significant events, and once satisfied the CAB provides the final approval for the change to go ahead at the specified time and date. Testers are lined up, and the change is executed as planned, outside business hours. A problem is detected by the testers, and after brief troubleshooting to determine the cause, the change is successfully rolled back. The following morning, the CAB meets again to review the outcome of the previous night’s changes, and request a root cause analysis of the change failure from the engineer who had authored the change. The report is reviewed, and once the CAB is satisfied that the error has been correctly identified and steps have been taken to ensure that it isn’t repeated, the engineer gets approval to update their script and repeat the review and change control process again so that a second attempt can be scheduled.

I’m guessing that elements of both these scenarios are familiar to most readers. I’m also going to take a stab in the dark here and say that many people reading the second scenario raised their eyebrows about the constraints of the process described, and the inevitable delays and paperwork that it must introduce; I believe the standard war cry is that “the process gets in the way of actually doing my job.” And there’s a truth to that – process certainly can kill productivity. On the other hand, it can also add a layer of protection to the network that can help keep you in business. Whether the network is the service you provide to your customers (e.g. in a Service Provider) or your customers are internal (i.e. you maintain the internal corporate network), no customer likes to see unplanned service interruption, and depending on the nature of the business the actual cost of such outages can be surprisingly high.

Ok, so now you’re probably assuming that I’m going to tell you that the Process Driven approach is the right one, and you don’t need to bother reading the rest of this. Not quite. I’ll tell you that there’s value to it, but I’d also note that obsessively process-driven organizations can also be inflexible and slow moving – things that customers also do not like, when they need that change, or are waiting for a new product to be launched. There’s a compromise required for sure, but regardless of the process that surrounds you, as Engineers I believe there are things we can do that will improve our success rate and assist troubleshooting efforts. You can even help with – gasp – documentation along the way!

2. The Change Script

For me, the biggest thing an Engineer can do is to write a good change script. Yes, a script. It’s not cool to do things in your head – things get missed, errors get made, and then it’s very hard to evaluate what was changed.

TIP #1: Write your changes down, no matter how tedious it seems.

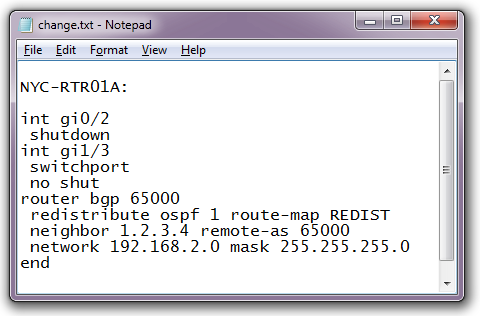

How you write them down will also make a huge difference. I would not consider the following, for example, to be a good change script:

I mean, yes, it might be syntactically correct, and congratulations for following TIP #1 and all that, but what does this change actually do? Many larger organizations separate the Engineering and Operations organizations, so this change script may be handed off to an Operations group to execute. How do they know that interface GigabitEthernet0/2 is the right interface to shut down? What’s being shut down? Should the BGP neighbor come up at this time? Should we be testing anything? It looks like some pretty major routing changes are going on. If something breaks, how do I back out?

Think I’m kidding? I’ve seen – and indeed, I’ve had to issue – scripts just like this to an Operations team – and amazingly they were executed without question. Looking back, the Ops folks must have had nerves of steel to take these contextless directives and put them on a production network. So with that in mind, even though there are only nine lines of configuration in this example, they scream out to me as needing some improvement, so let’s look at a couple of ways to make things better.

3. The Syntax

When it comes to change scripts, it’s important to note that there are two levels of ‘correctness’ in my eyes, and they are absolutely not the same thing:

Syntactically correct means that when you paste the commands in, the router doesn’t spew errors. This is the very basic requirement for a change script – the syntax should be correct!

Semantically correct means that the change does what you intended it to do and solves the problem you had. For this to work, the change will have to be syntactically correct of course, but if your syntactically correct change does the wrong thing, we didn’t fix anything (and possibly caused other problems).

TIP #2: Validate your syntax.

For syntax, it’s really simple – use lab equipment or an emulator (e.g. GNS3 / dynagen / dynamips, or the fabled IOU) to validate that the commands you have written down are correct. Try to ensure that the IOS version you are using to validate is similar to the version on the router you are configuring. There’s nothing so fun as executing a change only to discover that that command you were relying on doesn’t exist in the version of code running on the production router.

Basically, in my eyes there’s little excuse for syntax errors in scripts that are hitting a router.

4. Beware The Copy and Paste Monster

Especially in environments where changes are made to redundant pairs of devices, the temptation to copy and paste chunks of configuration in your script is strong – after all, the two routers are configured so similarly that you’ll likely be making the same change on both devices in a pair.

TIP #3: Don’t Copy and Paste.

That’s a bit of a black and white statement isn’t it? You can take this one with a grain of salt, BUT – just note that it’s very dangerous to copy and paste anything that involves interface IPs, OSPF network statements (if you use host wildcards), and so on. Copy and paste can be deadly unless you’re really diligent at identifying every reference that needs to change. Go carefully, my friends!
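If you do end up copying between the two halves of a redundant pair, a quick mechanical check can catch the most obvious leftovers. The following is only a minimal sketch in Python, assuming each device's section of the script is available as plain text; the function names are hypothetical and it only looks at `ip address` lines, so it is a review aid rather than a substitute for reading the script.

```python
import re

# Loose dotted-quad check; good enough for a review aid.
IPV4 = re.compile(r"(?:\d{1,3}\.){3}\d{1,3}")


def interface_addresses(script: str) -> set[str]:
    """Return the addresses set by executable 'ip address' lines in a script."""
    found = set()
    for line in script.splitlines():
        words = line.split()
        # Comment lines start with '!' so they never match 'ip address'.
        if len(words) >= 3 and words[0] == "ip" and words[1] == "address":
            if IPV4.fullmatch(words[2]):
                found.add(words[2])
    return found


def duplicated_interface_ips(script_a: str, script_b: str) -> set[str]:
    """Interface IPs configured in both scripts: likely copy-and-paste leftovers."""
    return interface_addresses(script_a) & interface_addresses(script_b)


# Illustrative sections for the two routers in a pair (addresses are made up).
rtr_a = """interface GigabitEthernet0/1
 ip address 192.168.250.1 255.255.255.0
"""
rtr_b = """interface GigabitEthernet0/1
 ip address 192.168.250.1 255.255.255.0
"""
print(duplicated_interface_ips(rtr_a, rtr_b))
```

Anything this reports still needs a human to decide whether it is a genuine mistake.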

5. The Backout

How do we back out IOS scripts? We put “no” in front of every line! I’m kidding, obviously, yet I’ll bet we’ve all seen scripts that do this. To be absolutely fair, very often that’s sufficient so long as we avoid some of the existing structure. For example, in the change script above, the new BGP neighbor is added with these commands:

router bgp 65000
 neighbor 1.2.3.4 remote-as 65000

To back that out, since (we assume) BGP 65000 already exists, we likely want to do:

router bgp 65000
 no neighbor 1.2.3.4 remote-as 65000

That is, we don’t just put ‘no’ in front of both lines. This is simple stuff and I think most people can cope with doing this on the fly, although as I implied earlier, I’m making the assumption here that BGP process 65000 already exists. Perhaps it didn’t – how would I know unless I ran some checks before making any changes?

So all’s good until you find a command that doesn’t work so nicely. We added OSPF to BGP redistribution with these commands:

router bgp 65000
 redistribute ospf 1 route-map REDIST

Putting aside that the route-map is badly named, let’s back this out (picture that you’re under stress, it’s 3AM, and executive management is screaming for blood):

router bgp 65000
 no redistribute ospf 1 route-map REDIST

All good? Not really. This particular ‘no’ command actually just removes the route-map from the redistribution, but leaves redistribution in place – now without any filters; things just got worse. What’s the lesson here?

TIP #4: Document – and validate – your backout script.

Use the same lab or emulator you used to validate the change syntax, and make sure that the configuration has been restored to its original state after you run the backout commands. You don’t want to be trying to figure out how to back out a 200-line script when you’re under pressure, and as we saw above, even a two-line backout can cause chaos. There’s little worse than backing out a change that caused an outage only to find that you introduced a new problem in its place.
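One way to confirm that a backout really restored the original state is to diff the configuration captured before the change against the one captured after the backout. This is a minimal sketch using Python's standard `difflib`; the function name and the sample lab captures are assumptions for illustration, with the leftover `redistribute ospf 1` line standing in for the failed backout described above.

```python
import difflib


def config_drift(before: str, after_backout: str) -> list[str]:
    """Return unified-diff lines between the pre-change and post-backout configs."""
    return list(
        difflib.unified_diff(
            before.splitlines(),
            after_backout.splitlines(),
            fromfile="before-change",
            tofile="after-backout",
            lineterm="",
        )
    )


# Illustrative lab captures: the naive backout left redistribution behind.
before = """router bgp 65000
 bgp log-neighbor-changes
"""
after_backout = """router bgp 65000
 bgp log-neighbor-changes
 redistribute ospf 1
"""

for line in config_drift(before, after_backout):
    print(line)
```

An empty result means the two captures match line for line; anything else is something the backout script missed.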

Backout scripts must be CLEARLY identified as backout, and differentiated from the actual change configuration. You don’t want a tired Ops engineer to run your commands, then plough on blindly and back them out again without thinking 🙂

So we’ve covered the basics – we got the script syntactically correct, and we added a validated backout. If this is as far as you read and you do this for every change script, you’re probably in the upper quartiles now. But wait, there’s more! Why stop at syntax?

6. Change Simulation

For the record, I do not subscribe to the school of thought that says “if we simulate every change in the lab, we’ll never have an outage.” This only works well if you have a tiny network and your lab 100% matches production down to the cable, with production-mirrored syntax and appropriate route injections to simulate external peers. Even then, sod’s law says that the change you make relating to the external peers that you didn’t fully replicate will be the one that takes down your network.

Back in the real world however, most networks are too big to effectively simulate. That said, I’m a big fan of trying to simulate what you can. I use GNS3 regularly when writing complex routing changes to ensure as best I can that the change behaves the way I expect it to, and that the downstream impact is as expected. I might only be modeling 4-6 routers rather than the 20 that are impacted, but I do try my best to at least minimize the likelihood that I’m just dead wrong about something.

TIP #5: Simulate whatever seems sensible in order to validate your change.

You can give yourself permission to skip this step for access port turn-ups, ok? If you’re really good, that is.

7. Semantic Validation

Or: Are You Actually Doing The Right Thing?

As I mentioned earlier, while correct syntax is essential, it’s no good if the changes you make aren’t actually going to have the end result that you had anticipated. Of course, if you knew it wouldn’t have the right result, you would have already done it a different way. From this you can conclude that the worst person to sanity check a script is you yourself.

You should of course check your own work for syntax, but when it comes to getting a grip on whether your change will work properly, you need somebody else to review your change and assess whether or not it’s the right approach, and to evaluate whether or not you got it right.

TIP #6: Always get a peer (or better) review. In my opinion, this should be mandatory for ALL changes, no matter how small.

Any review is better than no review, but obviously the better qualified the reviewer, and the better they know the environment in which the change is being made, the more valuable and reliable the review is likely to be. Most people are pretty amazed (and embarrassed) at how many errors a good peer review can reveal. The reviewer should be logging into the devices and validating the existing configuration and checking that your assumptions are correct. They should use their experience on the network to assess how your change will affect other devices in the network, which leads neatly into:

8. Downstream Impact

Often forgotten when writing a change on a device is what the impact is of the change on other devices – i.e. those downstream of your change. For example, let’s assume that you need to change the OSPF reference bandwidth on a router because it’s making a suboptimal decision about which interface to use for a particular destination. The change in itself may work locally on the router, but how will it impact other routers in the same OSPF area? Will their view of the network now change for the worse? Have you thought it through?

Another example may be that somebody looks at BGP and sees that for some reason, three BGP NLRI are being generated on a particular device:

192.168.0.0/16
192.168.0.0/17
192.168.128.0/17

The engineer decides that this is wasteful, and removes the two /17 networks from BGP. Locally this may not make much difference. Downstream however, traffic on the two WAN links (which were using the /17 routes to distribute traffic) is now all going over a single link and the link is almost at saturation point. Nothing bad will show up on the device where the change is made – it’s all downstream.
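To see why removing the more specific routes matters downstream, it can help to model longest-prefix matching on a downstream router. The sketch below uses Python's standard `ipaddress` module; the link names WAN-1 and WAN-2, the mapping of prefixes to links, and the test destinations are assumptions made purely for illustration.

```python
import ipaddress


def best_route(destination: str, routes: dict[str, str]) -> str:
    """Return the exit link chosen by longest-prefix match for a destination."""
    address = ipaddress.ip_address(destination)
    candidates = [
        (ipaddress.ip_network(prefix), link)
        for prefix, link in routes.items()
        if address in ipaddress.ip_network(prefix)
    ]
    if not candidates:
        raise LookupError(f"no route to {destination}")
    # The most specific (longest) matching prefix wins.
    network, link = max(candidates, key=lambda item: item[0].prefixlen)
    return link


# Hypothetical downstream view before and after the two /17s are removed.
before = {
    "192.168.0.0/16": "WAN-1",
    "192.168.0.0/17": "WAN-1",
    "192.168.128.0/17": "WAN-2",
}
after = {"192.168.0.0/16": "WAN-1"}

for destination in ("192.168.10.10", "192.168.200.10"):
    print(destination, best_route(destination, before), best_route(destination, after))
```

In this model the second destination moves from WAN-2 to WAN-1 once the /17s are gone, which is exactly the kind of shift that never shows up on the router where the change was made.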

TIP #7: Think about the effect on downstream devices!

Between the engineer authoring the script and the reviewer, attention to the knock-on effect of a change is critical. If you have a configuration archive and you’re playing with routing, why not search the archive for instances of the routes you’re manipulating? What you find may save your bacon.

9. Current Configuration

When you’re writing a change, you typically look at the current configuration and then create your script based on that. What happens if somebody changes the configuration between now and then? When the outage occurs, you’re left saying “Uh, but when I wrote the script, I’m sure that command wasn’t there. I checked!” Can you prove it?

TIP #8: Include dated configuration snippets in your change script.

Grab the existing configuration and paste the relevant parts into your script (and note the date on which you captured the configuration) to back up your change. This has a few benefits:

- If somebody changes the config, you have proof that you weren’t imagining things when you wrote your script;

- You can validate the router configuration against the snippets to ensure that there has been no change, before the script is executed.

- You provide the reviewer of your script with contextual information that will help them do their job better, and also save them time because they don’t have to log into the router and look for the configuration themselves;

- It provides a handy historical mini-snapshot of how things were on that date. When you look back, it will help you understand why you made the decisions you made in terms of the configuration. Remember I mentioned how this would help you document the network? Stick with it, there’s more in this vein.
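Checking the router against your dated snippets just before execution can be done by eye, but it is also easy to script. Here is a minimal sketch, assuming the snippet and the current running configuration are both available as text; the function name is hypothetical, and the comparison is line by line, so it does not know which interface or routing process a line belongs to.

```python
def missing_from_running(snippet: str, running_config: str) -> list[str]:
    """Return snippet lines that no longer appear in the running configuration."""
    running = {line.strip() for line in running_config.splitlines()}
    missing = []
    for line in snippet.splitlines():
        text = line.strip()
        if text and text not in running:
            missing.append(text)
    return missing


# Snippet captured when the script was written (illustrative).
snippet = """interface GigabitEthernet0/1
 description L3 link to NYC-RTR02A
 ip address 192.168.250.3 255.255.255.0
"""
# Running config on the night of the change: somebody has re-addressed the link.
running_config = """interface GigabitEthernet0/1
 description L3 link to NYC-RTR02A
 ip address 192.168.250.7 255.255.255.0
"""
print(missing_from_running(snippet, running_config))
```

If the list is not empty, the configuration has moved since the script was written and the change deserves a second look before anything is pasted.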

I should note that when you paste in configuration from the router, you really need to make sure that it is clearly identified as current configuration, and not as configuration to be entered into the router again.

If you’re using Notepad, the easiest way to prevent errors is to precede every non-executable line with a ! so that even if config is copied and pasted by mistake, only the lines without a ! are executed; the rest are ignored as comments. I use this trick, for example, to allow me to confirm interface descriptions and explicitly call out existing config when I’m making a change, e.g.:

!Change interface IP facing NYC-RTR02A:
!
interface GigabitEthernet0/1
! description L3 link to NYC-RTR02A
! ip address 192.168.250.3 255.255.255.0
 ip address 192.168.250.1 255.255.255.0

I actually also use colors and other formatting to make the executable change more obvious compared to the configuration I grabbed from the router itself, and I haven’t replicated that entirely here. However, what you can see is that the existing IP is .3, we see the interface description copied from the router, confirming that this is the correct interface (we hope!), then we see the actual change. This is quite effective, I promise! You’ll note that I used the ! to make a comment about the change as well; here’s another example:

! Change static route to point to 2.3.4.5 instead of 1.2.3.4
!
! Existing config (2011-12-07):
! ip route 1.1.1.1 255.255.255.255 1.2.3.4
!
! Add new route
ip route 1.1.1.1 255.255.255.255 2.3.4.5 name Force_DBHost_via_R01
!
! Remove old route
no ip route 1.1.1.1 255.255.255.255 1.2.3.4
!
end
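If you want to double check which lines of a commented script like this would actually be acted on, a few lines of Python will list them. This is a minimal sketch with a hypothetical function name; it simply treats any line whose first non-blank character is ! as a comment, which is how the trick above relies on the router behaving.

```python
def executable_lines(script: str) -> list[str]:
    """Return the lines a router would act on, skipping blanks and '!' comments."""
    commands = []
    for line in script.splitlines():
        stripped = line.strip()
        if stripped and not stripped.startswith("!"):
            commands.append(stripped)
    return commands


script = """! Existing config (2011-12-07):
! ip route 1.1.1.1 255.255.255.255 1.2.3.4
!
! Add new route
ip route 1.1.1.1 255.255.255.255 2.3.4.5 name Force_DBHost_via_R01
!
! Remove old route
no ip route 1.1.1.1 255.255.255.255 1.2.3.4
!
end
"""
for command in executable_lines(script):
    print(command)
```

Only the new route, the removal of the old route and the final end are listed; everything copied from the router for context stays inert.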

In theory you could cut and paste that whole lot into a router and only the desired changes would be made. It’s more typing than if you just put in the two commands – but then, isn’t this a lot clearer? From this excerpt we still don’t know why we’re changing the next hop, nor what those next hops are – that’s something that should also be in the change script, so let’s talk about:

10. Problem and Purpose

I believe that every change script to be executed in a data center should begin with a problem statement, even if the script is just for a port turn up. For a basic change like the port turn up, it doesn’t need to be complex – the purpose could be to “enable the ports for a server <y> to support the <x> service web interface”, or something like that. For a more complex change, the problem statement should explain the current status/architecture, what needs to be done, how you intend to achieve the change, and what the end status should be.

TIP #9: Always include a section that defines the script’s purpose.

The explanation you write may end up being one line or ten pages. Again, I can sense eyebrows being raised because that’s a pain to do. Yes, it can be – but doing it, as I see it, has six major benefits:

- Writing it out forces you to think through what you’re planning to do. Often, the very act of spelling out the problem and your solution along with some diagrams to demonstrate the change is enough to make you realize why something isn’t going to work the way you thought, or perhaps to alert you to a downstream issue that you hadn’t previously thought of.

- By documenting the purpose, you make the reviewer’s job easier and make the review more accurate as well, because they now understand the semantics of what you are attempting. Without a true understanding of purpose, the reviewer is just checking syntax, which is really only half the job.

- By writing out what you’re doing, you are creating an archive of network change documentation that you can store and search at a later date. When you wonder why something was implemented in a particular way, you can go back to this change script, read the explanation and understand what problem was being solved, and see why the particular solution was chosen.

- You give the group that actually executes the script (if it’s not you) a clear understanding of what the script does, which means that they are (a) more likely to approve it in CAB, and (b) more likely to detect any problems that occur, based on the fact that they have comprehension of the task at hand. This is often undervalued, but you get far fewer calls in the middle of the night if you empower the Operations engineer by giving them information.

- You provide a model for future changes of the same type, and a way to on-board new team members. This whole ‘learning by osmosis’ thing is way overrated I think. What better way for the new or junior team member to learn than to read recent change scripts and see how things are done, and understand how the network functions?

- Your documentation will make troubleshooting easier if any problems arise in the hours and days following your change. Operations can read what was done, troubleshoot it for you, and determine if the change needs to be backed out – all without calling you.

This is a big step up from just typing up a list of commands, and I believe – along with backout scripts – it is one of the major things missing from many organizations’ change requirements. When you look at the six benefits I listed above, doesn’t it make sense to do it? The level of understanding you can impart even in a couple of paragraphs ultimately reduces outages because it empowers everybody to do their jobs better and understand the network better.

11. Project or Service

What project or service is your change for? Just a quick mention of it will make the script searchable later!

TIP #10: State what project or service your change supports.

12. Don’t Include ‘conf t’

I see this a lot – scripts that include ‘conf t’ at the top. My advice is: don’t do that. If you must, put a comment saying “enter configuration mode”. After all, if either you or your Operations engineer doesn’t know to enter configuration mode before entering configuration commands, you have bigger problems on the horizon.

Critically though, I’ve seen it happen multiple times that an engineer is working on a configuration script, logging in to routers to validate information, and copying and pasting chunks of their script around – and one misplaced right click in a PuTTY window (or any other terminal client that supports right-click paste) dumps the paste buffer into a production router. Avoiding ‘conf t’ means it’s never in your paste buffer, and your accidental commands will be rejected and you should still have a job at the end of the day.
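If your team adopts this rule, it is simple to check a script for it before it goes out for review. The sketch below is an assumption-laden illustration in Python: the function names are hypothetical, and it treats a two-word line as entering configuration mode when the first word is a prefix of `configure` (at least four letters, my guess at the shortest unambiguous form) and the second is a prefix of `terminal`.

```python
def enters_config_mode(line: str) -> bool:
    """True for lines such as 'conf t' or 'configure terminal'."""
    words = line.lower().split()
    if len(words) != 2:
        return False
    first, second = words
    return (
        len(first) >= 4  # assumed minimum abbreviation: 'conf'
        and "configure".startswith(first)
        and "terminal".startswith(second)
    )


def config_mode_lines(script: str) -> list[int]:
    """Return the 1-based line numbers that would enter configuration mode."""
    return [
        number
        for number, line in enumerate(script.splitlines(), start=1)
        if enters_config_mode(line)
    ]


risky = "conf t\nrouter bgp 65000\n neighbor 1.2.3.4 remote-as 65000\n"
safer = "! enter configuration mode\nrouter bgp 65000\n neighbor 1.2.3.4 remote-as 65000\n"
print(config_mode_lines(risky), config_mode_lines(safer))
```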

TIP #11: Don’t put ‘conf t’ in your scripts

13. Test, Test, Test!

It’s amazing how many changes I have seen being made without even basic testing steps being undertaken. If you add a BGP neighbor, why not check if it came up? Is it receiving routes? If you enable an interface, why not check it came up?

TIP #12: Test before and after, compare results, specify what the result should be.

Testing takes many forms of course – some can be done on the routers, and some will be service-based and require the platform owners to participate. Testing should be specified in your change script, not in your head. If you’re making routing changes, it’s probably also worth having a basic set of standard tests to ensure that everything else still works; it’s easy to test only the service you’re making the change for, and forget that the routing affects downstream devices and that you might have just killed another service. Use your NOC or network management team if you have one, and get them to check alarms and service metrics for any anomalies.

The tip instructs you to specify what the result should be – this is really important. There’s no point saying:

TESTING
ping 1.2.3.4
traceroute 1.1.1.1
sh ip route 1.2.3.4

Is 1.2.3.4 supposed to respond to that ping? What am I expecting to see in the traceroute? What are you expecting to see in the output of the show ip route command? What are the SUCCESS criteria for these tests? If you don’t specify what counts as success, the testing is worthless. If possible, simulate actual command output – late at night (when many changes occur) it really helps to compare like for like.
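One way to force yourself to write down the success criteria is to record each test as a command plus the text you expect to see in its output. The following Python sketch shows the idea; the `Check` structure and `passed` function are hypothetical names, and the sample ping output lines are illustrative rather than captured from a real device.

```python
import re
from dataclasses import dataclass


@dataclass
class Check:
    command: str      # command the engineer runs on the device
    expect: str       # regular expression that must appear in the output
    description: str  # what success looks like, in plain words


def passed(check: Check, output: str) -> bool:
    """True when the captured output contains the expected pattern."""
    return re.search(check.expect, output) is not None


ping_check = Check(
    command="ping 1.2.3.4",
    expect=r"Success rate is 100 percent",
    description="1.2.3.4 should answer every ping after the change",
)

# Illustrative output lines for a good and a bad result.
good = "Success rate is 100 percent (5/5), round-trip min/avg/max = 1/2/4 ms"
bad = "Success rate is 0 percent (0/5)"
print(passed(ping_check, good), passed(ping_check, bad))
```

Even if nobody ever runs code like this, writing the expected pattern and the plain-words description next to each command is the part that makes the test plan usable at 3AM.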

14. Baseline Before/After

Protect yourself by baselining the devices you are changing – grab a copy of the running config. Check device CPU is normal. Check the logging buffer (or syslog servers) to see if the device has a problem before you start the change. This helps eliminate false errors after the change. I’ll wager that most readers have made a change then had somebody say “Oh my goodness, the event log is going crazy, back out, back out!” And then you realize that the event log was always crazy… Repeat the same commands before and after your change, and log the output. Always log the output… 🙂
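To tell a log that was always crazy from one that went crazy because of your change, compare the before and after captures by message type rather than by eye. This is a minimal Python sketch, assuming IOS-style messages tagged like %FACILITY-SEVERITY-MNEMONIC and assuming the two captures cover comparable periods; the function names and sample log lines are illustrative.

```python
import re
from collections import Counter

# Matches tags such as %LINK-3-UPDOWN in IOS-style log lines.
MNEMONIC = re.compile(r"%[A-Z0-9_]+-\d-[A-Z0-9_]+")


def message_counts(log_text: str) -> Counter:
    """Count how often each message type appears in a log capture."""
    return Counter(MNEMONIC.findall(log_text))


def increased(before: str, after: str) -> dict[str, int]:
    """Message types seen more often after the change than in the baseline."""
    # Counter subtraction keeps only the positive differences.
    return dict(message_counts(after) - message_counts(before))


before_log = """%LINK-3-UPDOWN: Interface GigabitEthernet0/2, changed state to down
%LINK-3-UPDOWN: Interface GigabitEthernet0/2, changed state to up
"""
after_log = before_log + "%BGP-5-ADJCHANGE: neighbor 1.2.3.4 Up\n"

print(increased(before_log, after_log))
```

Here the flapping interface was already noisy in the baseline, so only the new BGP message is reported as a difference.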

TIP #13: Baseline your devices before and after your change.

15. Document Information

When you create a change script, always include a title, your name and contact details, the date, the date config snippets were gathered, and so on. You may also want to include details like whether the commands were lab-tested, and who reviewed/approved the change script.

TIP #14: Include basic info in your scripts, including author details.

This info takes very little time to add, but again will pay you back in the future when you need to find a script, or find out who made a particular change.

16. Document Format

It is probably evident from the tips above that Notepad ain’t gonna cut it. I don’t care what your favorite tool is for knocking up configurations – we all have our preferences I’m sure – but ultimately, I’ve found that delivering documentation in a format that’s visually attractive and allows you to format the text as well as to include diagrams is a big step forward. To that end, I generally suggest using something like Microsoft Word for change scripts (but for goodness sake, please format configuration as Courier New or similar – it’s what the eye expects). I have previously created templates with pre-defined styles for configuration, headers, comments, config snippets, and so forth so that formatting text is simple.

TIP #15: Deliver change scripts in Word format

If you use document management systems like SharePoint, you also have the ability to edit and version-control Word documents in an integrated fashion, and SharePoint indexes Word document contents as well, so you can search your archive. In fact, I’ve previously used SharePoint for the change review / approval process as well, providing both a record of the approval process and a searchable archive of all changes made since the site was set up.

17. Use Change Script Templates

Now that you’ve started using Word, create a template with standard styles for each type of information, and a framework for all the information that’s expected in the script. Now mandate that the whole team use it. It’s actually quite refreshing to have every script look and feel the same way, and it makes reviewing, reading and executing scripts much easier because everybody knows where to look for information, and they know what the changes that should be pasted into a router look like.

TIP #16: Use a change script template

18. Date and Version Control

Every script – and its filename – should have a version number and date. Way too often I’ve seen confusion during a change window when it’s realized (usually half way through) that people are working from different cuts of the change script.

TIP #17: Add Date and Version to all scripts, and keep it updated.
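A consistent filename convention makes it easy to confirm, at the start of a change window, that everybody is holding the same cut. The Python sketch below assumes a made-up convention of title, version and ISO date with a .docx extension; the convention, the function names and the sample title are all hypothetical, so substitute whatever your team standardizes on.

```python
import re
from datetime import date

# Assumed convention: <title>_v<major>.<minor>_<YYYY-MM-DD>.docx
STAMP = re.compile(r"_(v\d+\.\d+_\d{4}-\d{2}-\d{2})\.docx$")


def script_filename(title: str, major: int, minor: int, when: date) -> str:
    """Build a filename that carries the script's version and date."""
    slug = re.sub(r"[^A-Za-z0-9]+", "-", title).strip("-")
    return f"{slug}_v{major}.{minor}_{when.isoformat()}.docx"


def version_stamp(filename: str):
    """Return the 'vX.Y_YYYY-MM-DD' part of a filename, or None if it is missing."""
    match = STAMP.search(filename)
    return match.group(1) if match else None


def same_cut(filenames: list[str]) -> bool:
    """True when every filename carries the same version and date stamp."""
    stamps = {version_stamp(name) for name in filenames}
    return len(stamps) == 1 and None not in stamps


name = script_filename("NYC BGP neighbor add", 1, 2, date(2011, 12, 7))
print(name, same_cut([name, name]))
```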

Putting It All Together

It’s not as bad as the previous 4,500 words might make it seem. Here are the tips all together in a handy list:

- Write your changes down, no matter how tedious it seems.

- Validate your syntax.

- Don’t Copy and Paste.

- Document – and validate – your backout script.

- Simulate whatever seems sensible in order to validate your change.

- Always get a peer (or better) review.

- Think about the effect on downstream devices!

- Include dated configuration snippets in your change script.

- Always include a section that defines the script’s purpose.

- State what project or service your change supports.

- Don’t put ‘conf t’ in your scripts

- Test before and after, compare results, specify what the result should be.

- Baseline your devices before and after your change.

- Include basic info in your scripts, including author details.

- Deliver change scripts in Word format

- Use a change script template

- Add Date and Version to all scripts, and keep it updated.

If you create a good change script template, much of the work is done for you. The template I usually use contains all the styles necessary to format the document consistently, then has the following sections, each starting on their own page. Sections can be removed if not needed, or just marked as not required:

- Document Info page, containing:

- Title

- Script version and date

- Author (and contact info)

- Work request number (the request for me to do the work)

- Purpose (1 line summary)

- Priority / Criticality

- Project Manager

- Impact of delaying execution

- Date of Configuration Snapshots

- Table of Contents

- Checklist

- Is the change service impacting?

- Has it been lab tested?

- Is other support required?

- Is testing required outside what’s in the script?

- Is the change on production devices?

- Dependencies & Pre-Requisites

- Document any pre-change requirements, e.g. does another change have to be executed before this one will work?

- Detailed Background / Purpose / Requirements, if required

- Pre-Change Testing

- Includes standard set of test commands for IOS, NXOS, ScreenOS, Junos OS

- KPI and Alarm/Event Log Checks for NOC or NMS

- A boilerplate guide to the document styles that are used

- Cable matrix (if applicable)

- IP matrix (if applicable)

- Device configuration

- Each device to be configured starts on a new page with the device name as the title for the section (it also auto-populates into the header so that when there are multiple pages of changes for a device, it’s easy to reconfirm what device you are in the middle of configuring);

- Testing commands and output for each specific device is included inline as required;

- Config snippets for each device are included either at the start of a section, or individual lines are included on the way through

- Post-Change Testing – tests to be carried out after all changes are completed

- Backout scripts (usually in reverse order to the implementation scripts above)

- Post-Backout Testing

If I didn’t feel the eyebrows raising before, I know they’re up right now. It looks huge, doesn’t it? But here’s the thing – most of this information is already in the template. I just fill in some gaps. Sections that are likely to be optional are, by default, marked as “NOT REQUIRED” or similar in the template – so if I don’t need them, I have no change to make. All I really have to do is to explain what the change is, write the change, and go from there. Every script looks the same; it’s clear, consistently formatted, and there’s enough information in there to sink a bus. And for archival purposes, it’s awesome – the information in a change script of this type is like gold when you need to look back and understand a change.
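The "optional sections default to NOT REQUIRED" idea can be sketched in a few lines of Python. This is a plain-text stand-in, not the Word template described above: the section names come from the outline, but which ones are treated as optional, the `build_skeleton` name and the sample values are all assumptions made for the example.

```python
from datetime import date

# Section names follow the outline above; the optional flags are an
# assumption made for this example.
SECTIONS = [
    ("Document Info", False),
    ("Checklist", False),
    ("Dependencies & Pre-Requisites", True),
    ("Detailed Background / Purpose / Requirements", True),
    ("Pre-Change Testing", False),
    ("Cable Matrix", True),
    ("IP Matrix", True),
    ("Device Configuration", False),
    ("Post-Change Testing", False),
    ("Backout Scripts", False),
    ("Post-Backout Testing", False),
]


def build_skeleton(title: str, author: str, version: str, today: date) -> str:
    """Render a plain-text skeleton with optional sections pre-marked."""
    lines = [
        f"Title:   {title}",
        f"Version: {version} ({today.isoformat()})",
        f"Author:  {author}",
        "",
    ]
    for name, optional in SECTIONS:
        lines.append(f"== {name} ==")
        # Optional sections default to NOT REQUIRED, so the author only
        # edits the ones that actually apply to this change.
        lines.append("NOT REQUIRED" if optional else "TODO")
        lines.append("")
    return "\n".join(lines)


# Hypothetical change details, purely for illustration.
print(build_skeleton("Add Po1 members", "J. Example", "0.1", date(2011, 12, 1)))
```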

There’s more I could say, but I have to stop somewhere and this is a good grounding at least. I hope this has given you something to think about; or maybe you have something you can teach me too.

My name is John Herbert, and I approve this message. Happy scripting!

I’d love to hear how you do your change scripts. What did I miss? Is there anything here you’re going to start doing from now on? Do you agree, or is this just too much for you? Throw in your opinions below!

I’ll add another thought while I remember – kind of a TIP #9B: In addition to configuration snippets, I also include show command output (and similar) if it helps explain the current status of a device. For example, if I’m playing with etherchannels, it may be useful to show the current status of the etherchannel before I add a couple of members, so that when testing after the commands have been put in, I know how many members I should be seeing, and so forth. Again, this helps significantly if somebody changes something between when you wrote the script and when it gets executed.

I really like the idea of dating the existing config snippets that are referenced in the script. I’ve always included those, but not dated them and sometimes I build a script weeks before a change. Dating that section will remind me to go revalidate again.
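A dated snapshot also makes the "go revalidate" reminder easy to automate. Here is a minimal sketch in Python; the seven-day threshold is purely an assumption for the example, so pick whatever suits your environment.

```python
from datetime import date, timedelta

# Hypothetical threshold: how old a snapshot may be before it should be
# re-checked against the live device.
MAX_SNAPSHOT_AGE = timedelta(days=7)


def snapshot_is_stale(snapshot_date: date, change_date: date,
                      max_age: timedelta = MAX_SNAPSHOT_AGE) -> bool:
    """True if the config snapshot is too old to trust on the change date."""
    return change_date - snapshot_date > max_age


# Example: script written a month before the change window.
if snapshot_is_stale(date(2011, 11, 1), date(2011, 12, 1)):
    print("Snapshot is stale: revalidate config snippets before execution")
```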

I typically use a plain text file for convenience across platforms, ability to paste it right into an email, etc., but I still include a comment block at the top with customer info, the device to be changed (and the mgmt IP it should be accessed on), functional description, project code or ticket number, etc.

For highly templated changes or config templates, I tend to organize the commands into relevant sections and block them out with big obvious comment tags like:

!#############################!

!#### Remote Access VPN - Begin ####!

!#############################!

command 1

command 2

command 3

!#############################!

!#### Remote Access VPN - END ####!

!#############################!

That makes it really easy to remove that entire config section from my template if it’s not needed for this particular event.
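If the template lives in a text file, dropping a tagged section can even be scripted. The following is a rough Python sketch that assumes the Begin/END marker style shown above, each wrapped in a banner line of `#` characters; the `remove_section` name and the sample template are made up for illustration.

```python
import re

# A banner line such as '!#############################!'
BANNER = re.compile(r"^!#+!$")


def _marker(name: str, kind: str):
    """Regex for a line like '!#### <name> - Begin ####!' (case-insensitive)."""
    return re.compile(
        rf"^!#+\s*{re.escape(name)}\s*-\s*{kind}\s*#+!$", re.IGNORECASE
    )


def remove_section(template: str, name: str) -> str:
    """Return the template with the named tagged section cut out."""
    lines = template.splitlines()
    begin_re, end_re = _marker(name, "begin"), _marker(name, "end")
    begin = next((i for i, l in enumerate(lines) if begin_re.match(l.strip())), None)
    end = next((i for i, l in enumerate(lines) if end_re.match(l.strip())), None)
    if begin is None or end is None or end < begin:
        raise ValueError(f"section {name!r} not found or malformed")
    # Widen the cut to include the banner lines around each marker.
    start = begin - 1 if begin > 0 and BANNER.match(lines[begin - 1].strip()) else begin
    stop = end + 1 if end + 1 < len(lines) and BANNER.match(lines[end + 1].strip()) else end
    return "\n".join(lines[:start] + lines[stop + 1:])


# Hypothetical template fragment using the tag style above.
TEMPLATE = "\n".join([
    "hostname example",
    "!#############################!",
    "!#### Remote Access VPN - Begin ####!",
    "!#############################!",
    "command 1",
    "command 2",
    "!#############################!",
    "!#### Remote Access VPN - END ####!",
    "!#############################!",
    "ntp server 192.0.2.1",
])

print(remove_section(TEMPLATE, "Remote Access VPN"))
```

Raising an error when a marker is missing is deliberate: silently returning the template unchanged would hide a typo in the section name.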

My other tip is when doing a complex change script: I try to format my scripts just like the IOS config output will look with proper indents, mostly complete commands for anything non-trivial, etc. It makes it easier on my eyes to read through it and complete commands make it more obvious what is going on. I prefer:

interface serial0/0

 encapsulation frame

 ip address 1.1.1.2 255.255.255.252

 frame map ip 1.1.1.1 201

 no shut

 exit

ip route 0.0.0.0 0.0.0.0 1.1.1.1

to this:

int s0/0

 enc fr

 ip add 1.1.1.2 255.255.255.252

 fr m ip 1.1.1.1 201

 no s

ip ro 0.0.0.0 0.0.0.0 1.1.1.1

Lastly, as shown above, I usually include “exit” statements from config sub-levels when appropriate (and borne out in testing/validation), because every so often a command does not work correctly if you’re in the wrong sub-mode. Also, if you abbreviated the command too much, a command that works in (config) mode may be ambiguous when you’re buried in (config-subif) or (config-pmap) mode.

And of course my indents didn’t make it through the HTML even in code mode, but you get the idea….

I fixed it for yas.

Indenting. YES. I am 100% with you on that, and do exactly the same. It does make the code significantly easier to read! I also usually type the entire command out – otherwise it makes the reviewer’s life unreasonably difficult to have to decode the short hands. Both great points I wish I’d remembered to include, but now they’re here so it’s all good!

Exits are good so long as you’re really careful with them – that is, when you’re editing your script you don’t leave any bonus ‘exit’ statement in and get surprised as you are logged out 🙂

I’ll use a text file if I have to, but I’ve found that it gets challenging to present the information as clearly as I would like, especially in a long script. And the ability to include good diagrams really helps. So I still vote Word, but as with all these tips, it’s all about your environment. Most companies have a standard build that includes an office suite, so I abuse the one that they have 🙂

p.s. You can indent using &nbsp; even within a code block, it seems. (Just tested)

Great article, thanks for sharing.

#12 — Been there, done that. I don’t include ‘conf t’ anymore for *exactly* this reason.

Learned this lesson while prepping a change once. I was “mousing” to the right, and bumped the mouse into my coffee cup, unloading my paste buffer into an unlucky device 🙁

Yeah, it hurts doesn’t it?

I’ve seen people saved before by AAA denying configuration access (thank goodness), but knowing that only half the routers in that particular data center would have denied the commands rather took the edge off the delight… 🙂

I did this exactly last week. Re-IPed an ASA interface. Noticed my mistake, removed the interface, re-configured it, re-applied the access-list…. then noticed several hours later that a route disappeared at the same time….

Never again…

Thanks for the reminders/tips.

First of all, this is a great article – thanks, it must have taken a wee while to get all that down 😉

One thing I think you’re missing is directing the user of the change script to take a backup of the config (or not, in certain circumstances) before they go charging in to implement your changes. Equally important is to flag up at what point the config should be saved. Depending on the environment, it can sometimes be against policy to save to NVRAM before the changes have been stress tested in running-config for some time.

One other thing to consider is automated tools – I’ve come across a number of larger enterprises where the change management process is beginning to be automated using scripts and other clever bits of software to determine whether all the commands entered in a change script are strictly necessary, or whether they’re already covered later on in the config. This is particularly relevant to firewall changes. The number of times I’ve seen rules in an enterprise firewall that are rendered redundant by an older rule a couple of lines down is staggering.
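For what it’s worth, the core of that kind of redundancy check is not complicated. Below is a minimal Python sketch under some loud assumptions: rules are evaluated top-down with first match wins, they are IPv4 only, and each rule is reduced to a source network, a destination network and an optional port. The `Rule` structure and the sample rules are hypothetical and far simpler than a real firewall policy.

```python
from dataclasses import dataclass
from ipaddress import ip_network
from typing import Optional


@dataclass(frozen=True)
class Rule:
    action: str           # "permit" or "deny"
    src: str              # source network in CIDR form
    dst: str              # destination network in CIDR form
    port: Optional[int]   # None means any port


def covers(earlier: Rule, later: Rule) -> bool:
    """True if every packet matching `later` already matches `earlier`."""
    return (
        ip_network(later.src).subnet_of(ip_network(earlier.src))
        and ip_network(later.dst).subnet_of(ip_network(earlier.dst))
        and (earlier.port is None or earlier.port == later.port)
    )


def find_shadowed(rules: list[Rule]) -> list[tuple[int, int]]:
    """Return (shadowing_index, shadowed_index) pairs under first-match rules."""
    shadowed = []
    for j, later in enumerate(rules):
        for i in range(j):
            if covers(rules[i], later):
                shadowed.append((i, j))
                break  # the first covering rule is enough
    return shadowed


# Hypothetical rule base: rule 1 can never match because rule 0 covers it.
RULES = [
    Rule("permit", "10.0.0.0/8", "192.0.2.0/24", None),
    Rule("permit", "10.1.1.0/24", "192.0.2.10/32", 443),
    Rule("deny", "172.16.0.0/12", "192.0.2.0/24", 22),
]

for i, j in find_shadowed(RULES):
    print(f"rule {j} is shadowed by rule {i}")
```

A shadowed rule with the same action is merely redundant; one with the opposite action is a rule that never does what its author intended, which is the case worth flagging loudest.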

In terms of formatting the script, I try and treat the config script as if it’s a piece of code – in fact if you think about it, the config script is really just a bit of shell script. So all the rules about writing good code apply to your change scripts: comment lots, choose meaningful variable names, and document the hell out of whatever you’re doing.

Hi Neil. Step 14 says to baseline before/after, including grabbing the running-config, as well as checking other things like CPU utilization (it’s always nice to know those pre-existing conditions!). You’re right about config saves, although in my experience, if you hand off to an operations team they will ignore anything you tell them regarding saving, and will do it whenever they – or the policy they’re bound by – tells them to. In principle though, you make a good point – and so long as you as the author of the script are clear on what the operational policies are regarding saving, it would be useful to include that.

Agreed about formatting, although I’d add “and be consistent across the team”. It’s one thing to code by your own standards, but if everybody uses a different standard, it’s hell to have to read code where everybody contributed. My point about the use of a template (for styles as well as to standardize the organization and content) is to ensure that not only do you create your own scripts the same way every time, but that the whole team produces scripts the same way. That way if you’re on the Operations team receiving a script for execution, you get to see every script presented the same way; you always know which bits are configuration intended to be put in the devices, you know what is backout, what’s commentary, and where the backout is located. It’s amazingly helpful.

Automated tools are an interesting category. There’s definitely a place – if you have the tools – for validating your configuration and ensuring there’s no duplication. The firewall rule example you give is a great use case for that kind of check, and similar would apply to ACL / prefix-lists, route-maps and so on. If you have appropriate rules set up you can also police the application of various standards and policies too. So yes, if you have the tool, use it!

For deployment purposes, I honestly am not so keen on automated tools, although I’ve also seen some larger companies trying to move in that direction. If you have a simple change to apply (say, updating SNMP community strings on 50 devices, or turning up an access port) then by all means fire away and let the machine do it; it’s still important that the script goes through the same review process before it’s executed though, and I get the feel from some organizations that the mind set shifts to “don’t worry, the software will catch any errors”. I think that’s a risky assumption, especially as the software that deploys the script may not be the same software that can analyze a script (other than at a pure syntactical level) – so you’d need both to even start having a level of confidence.

All software should (allegedly) catch syntax errors; some of it will catch logical errors (e.g. referencing a prefix-list that doesn’t exist), but generally speaking software is not good at catching semantic errors – and how could it be? If you run through a script to turn up an access port, software likely won’t catch that you entered the wrong port number. Really good software might look at the existing switch config and point out if the port you chose is already enabled, but if you chose another ‘shutdown’ port, how can it validate any further? There are some programs that try to model the network, and those are great because they can warn you of downstream routing changes, for example. I’ve started to see that kind of analysis being tried out to try to determine a script’s knock on effects as part of the script validation process, which is a helpful step. Obviously it’s separate from the choice of how to deploy that validated script. I’d never trust the software alone to check the script; run it through, then send it to a human with experience.
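The "logical error" category is the kind of thing a small script can catch. As a sketch only, here is how the missing prefix-list example might be checked in Python. It assumes IOS-style syntax and only recognizes two reference forms (route-map `match ip address prefix-list` and BGP `neighbor ... prefix-list`), reading just the first name on a match line; the function name and sample config are invented for the example.

```python
import re

# Definitions look like: ip prefix-list NAME seq 5 permit 10.0.0.0/8
DEFINED = re.compile(r"^\s*ip prefix-list (\S+) ", re.MULTILINE)

# Two common reference forms; a real checker would need to cover more.
REFERENCED = re.compile(
    r"^\s*(?:match ip address prefix-list|neighbor \S+ prefix-list) (\S+)",
    re.MULTILINE,
)


def undefined_prefix_lists(existing_config: str, change_script: str) -> set[str]:
    """Prefix-lists the script references but nothing defines."""
    combined = existing_config + "\n" + change_script
    defined = set(DEFINED.findall(combined))
    referenced = set(REFERENCED.findall(change_script))
    return referenced - defined


# Hypothetical device config and change script.
EXISTING = "ip prefix-list CUSTOMER-A seq 5 permit 10.1.0.0/16\n"
SCRIPT = """route-map RM-IN permit 10
 match ip address prefix-list CUSTOMER-A
route-map RM-IN permit 20
 match ip address prefix-list CUSTOMER-B
"""

print(undefined_prefix_lists(EXISTING, SCRIPT))
```

Note what this cannot do: if CUSTOMER-B existed but contained the wrong prefixes, the check would pass. That is the semantic gap a human reviewer still has to cover.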

More complex changes in my experience inevitably require some careful sequencing – which will be the subject of another post due soon – where you’re not just blasting changes out, but rather, the change requires steps to be taken in order on multiple devices, with checks made in between to ensure that each step has had the desired effect. Automated deployment systems really are suboptimal for that kind of thing. So while the human factor is prone to introducing errors, I’d also argue that in many cases it’s an essential component of the change because you need sequencing and feedback.
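To illustrate the sequencing-with-feedback pattern (as opposed to blasting changes out), here is a small Python sketch of the control flow. It is not a deployment tool: `apply` and `verify` are stand-ins for whatever a person or a system actually does at each step, and the `Step` structure, device names and step descriptions are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class Step:
    device: str
    description: str
    apply: Callable[[], None]    # makes the change for this step
    verify: Callable[[], bool]   # True if the step had the desired effect


def run_sequence(steps: list[Step]) -> tuple[bool, list[str]]:
    """Apply steps in order, checking each one before moving on.

    Returns (all_ok, applied), where `applied` lists every step that was
    applied, including one whose check failed. Backing out would normally
    walk that list in reverse.
    """
    applied = []
    for step in steps:
        step.apply()
        applied.append(f"{step.device}: {step.description}")
        if not step.verify():
            return False, applied  # stop; do not touch later devices
    return True, applied


# Stand-in actions: record which devices were touched; the second check fails.
log = []
steps = [
    Step("core-1", "add VLAN 100", lambda: log.append("core-1"), lambda: True),
    Step("core-2", "add VLAN 100", lambda: log.append("core-2"), lambda: False),
    Step("access-1", "move port to VLAN 100", lambda: log.append("access-1"), lambda: True),
]

ok, applied = run_sequence(steps)
if not ok:
    print("Check failed; back out in this order:")
    for item in reversed(applied):
        print(" ", item)
```

The important property is that the third device is never touched once the second check fails, which is exactly what a fire-and-forget push to all three would get wrong.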

Great comments, Neil, and thanks for taking the time to share your insights.

SharePoint is not a version control tool. All scripts (as well as full configs) should be managed in a real version control tool like Subversion, Mercurial, or Git. Branching and merging are important operations to learn about, and they apply directly to network configuration as well as code. These tools also do a proper job of separating metadata from content with commit log information.

Interesting. SharePoint does provide basic versioning, which I’d say is sufficient for most change script development and editing. The fact that the checkout / checkin process is integrated into the MS Office suite just makes it easy for people to use. And at the end of the day, if you create a system that the average engineer finds complex, they’ll find ways to avoid using it. I’d agree that it’s far from being a fully fledged source control system, but then, I’ve never had a need for anything more than that.

Assuming I’ve been missing a useful tool, I’m interested to hear more about where you see the uses for the level of version control you recommend in the context of change scripts. I’m not immediately seeing the need for branching and merging – it’s not like you check out a configuration, make changes, and check it back in, or have multiple branches of a device’s configuration. Rather, you write a script that gets you to the planned end goal based on the current device configuration. To ‘merge’ those requires more than textual manipulation, it requires an understanding of the actual commands in order to merge them the way the router would do. I’m not sure I’ve heard of a system that does that and my immediate reaction, which I’m sure you can help refute, is that this is akin to using a sledgehammer to crack a nut.

That said, when I last used SharePoint for script approval, we used a List item – which can’t use versioning – so we just maintained good issue numbering within the list item instead 🙂

I look forward to learning more – thank you!

Hmm… for some reason my reply didn’t show up as related to your post in a thread. See this comment for my reply.

Great Post. We don’t do most of the things you detailed above, but now I want to start. Would you be at all willing to share the Word template that you use for your change scripts?

Since I used it at work that may not be possible :/

My advice is to create one that suits your (and your company’s) needs and your own sense of style. The outline above would certainly give you a basis for the shape of content in the document, and the only remaining thing is to create styles so that things look consistent. On that note, the only things that, in my opinion, have to be in non-proportional font (e.g. Courier New) would be the configuration and backout sections, so that they look as they do in a terminal window. I also made pasted configurations (snippets) look that way too. In my case, ‘configuration’ was blue courier new 9pt, pasted config was green courier new (all lines should start with ! of course), backout was some hideous eggplant color courier new (and appears in sections towards the end of the document). Basically, it’s all down to your own taste. Try it out and see what you like the look of. I know there were people that didn’t like my choice of colors and so on, but the important thing was that we all used the same horrible colors 🙂

Also, I created styles for testing that were like ‘blocks’ in the text – a light grey background differentiated the testing blocks at a glance from everything else. Be creative!

Almost forgot something important – your template should be in Landscape orientation. Some config lines and testing output are very long, and you don’t want to have to wrap lines if you can avoid it. It feels odd at first, but it rapidly makes sense.

If a network engineer cannot understand subversion, and use a very easy tool like TortoiseSVN, they shouldn’t be a network engineer. We have graphic designers that use it for heaven’s sake, and it really doesn’t slow you down. It actually really speeds you up in some cases (especially tracking down a “when did this exact line change” sort of issue).

The need for branching and merging is fairly obvious: You should have a QA branch (“trunk” in subversion speak) and a production branch. Changes are made and tested in the QA branch/lab, and then merged into production (with network or other changes made as part of the merge). This ensures that all changes flow through QA, and QA looks as close to production as possible. You only ever deploy from the production branch in version control.

Branching and merging is also useful for similar devices: they are all branched from the same base configuration, and then changes that need to be applied to all of them can be merged across using the version control tools. Think of an ACL change that needs to be made to 80 switches at the same time.

The use case for a version control system with network devices is almost exactly like it is for SQL databases. That is, you want to:

- capture all changes, with metadata about who, when, and why

- be able to follow a history of all changes back to day one (never forget)

- mutate the state of a running system to a new state without data loss or downtime

- be able to restore all configuration and data to a known-good state rapidly and repeatably in case of device failure

- be able to rapidly see differences between any two versions of any configuration (this is what vcs tools are really good at) and track down when changes were introduced.

Developers and DBAs have developed a lot of practices in this area with source control tools. Basically, you start with a config dump as an initial point, and then append modifications to the bottom of the script, with comments, and a commented-out undo script. Every change is cumulative. It should always be “safe” to re-run the script in its entirety, or only just from a previous commit to the end of the configuration script.

When necessary, you can modify previous parts of the script to prevent bad interim states if the script were re-run in whole; this is usually done by commenting out or deleting those bad states from the script. The VCS keeps track of all of this change automatically.

We’ve been using Subversion with network device configurations for years, and it works beautifully. It is especially helpful for firewalls, which have a lot of state change, and each of those changes has a lot of metadata. We base a workflow around the VCS: the change “proposal” is the commit into the QA branch, and the peer-review and approval is based around the merge of that change into the production branch (Git makes sign-off an explicit feature). The commit of the merged changes into the production branch is done immediately after changes are deployed to production, so we get a timestamp and other metadata, plus any last-minute tweaks that may have been needed. Using the simple GUI diff tools, it is easy to pull just the delta, and run those only rather than re-run the whole script. In fact, we have deploy scripts that do this automatically with “svn diff” for some devices (mostly load balancers).

Finally, many trouble-ticketing systems, bug-trackers, and wiki tools have hooks into Subversion, Git, etc. There’s nothing nicer than having VCS changes related to a particular trouble ticket automatically linked into the ticketing system and vice-versa.

Ah ok, I see where you’re coming from. As a way to manage/store the proposed and executed changes, I can see that would work. How easy is it to manage a change that requires a series of configuration changes on multiple devices and keep the association between those changes so that if you need to back out one, the others are pulled up also?

Appreciate you sharing your opinions on this – it’s definitely an interesting read.

A pretty much perfect description of a fit for purpose network change control process – thanks.

I’ve half a mind to email a link to this post to my colleagues in the Engineering department of my organisation. I’m a bitter Operations engineer (Not for much longer I hope – 5 years is a fair stint at the networking coal face surely?) and have experience of almost all the things you talk about.

The worst part is when the process used is in theory pretty good but only superficially. So for planned changes there’s a word doc template used with description of change section and possibly diagrams of current and future layouts, then separate configuration and backout sections. So far so good.

But too often you realise the intro and description section refer to irrelevant devices or aims, as it’s a previous change’s finished document being rehashed to save a few lines of typing up on a fresh copy of the template. Then you start applying the script and commands are not taken or don’t work with the configuration present, so clearly no simulation or verification has taken place. (My favourite anecdote here is having to explain to an “on paper” more senior engineer that no matter how many times their script tried to “no shut” that interface it wouldn’t come up as it was a member of a port channel that was shutdown. I ended up telling them to read the config doc for Port Channels on the Cisco website…)

Perfect example of a superficial process not being effective is I recently carried out a “Peer Reviewed” change where they hadn’t noticed they had got the names of the devices to make the changes on wrong! It doesn’t get more fundamental than that and left me feeling the whole process was a sham.

My point is that your list is a perfect scheme to follow; it’s just that too many people probably implement the easy-to-do points without diligence and consider it enough of a fig leaf to cover a multitude of sins.

Ahh I’ve ranted enough now, I’ll be the one shaking my head in the corner wishing I worked for you John!

Thanks, Steve.

As you note quite rightly, most systems – no matter how well planned and intentioned – can be scuppered by people who don’t think it’s important enough to follow the process.

I’ve certainly seen the same things you mention – people re-using old changes and only changing the bits that they can be bothered to change (I always encourage starting each script on a new, blank template of course). All you can do is to try and drive (incentivize, motivate … threaten(?) – whatever is required) the team to do things properly for their own, and the company’s, sake. Similarly, the reviewers can’t skimp on their time either, and need to be thorough when reviewing – as they are putting their name to it. At one of my clients, when there was an outage due to a bad script, both the author of the script AND the reviewer who signed off on it would be responsible for explaining what happened and how errors got through the review process. Sometimes it was just things that were hard to spot; other times, it was clear that the reviewer had cut corners. Review is not a process to be underestimated – when you sign off on something as correct, as I see it you are staking your reputation on that fact, not just rubber stamping something for the sake of it, and that responsibility should be treated seriously.

The flip side to this is that documenting and testing scripts properly, and reviewing scripts thoroughly, takes TIME. That in turn means that management – or whoever is watching the clock and counting the units of output – has to support this longer process and understand why it’s important, especially as the net effect may mean you end up needing more headcount to do the work. Less output per person -> more headcount -> more cost -> fewer outages. Do the math on the cost of network downtime, and the ‘fewer outages’ bit rapidly makes financial sense in most cases. This comes down to the old adage that you can have something fast, you can have it done cheap, or you can have it done right. At a push, you can probably have two out of three goals achieved, but you can pretty much never get all three. Managers: make your choice…

Thanks for the tips. I was just trying to be lazy 🙂 . It will be good for me to build the templates myself. I’ve got a potential project coming up where a thorough template like this will be helpful.

Shoot, that was supposed to be a response to John’s comment on December 13, 2011 at 11:15 am…

Excellent advice. I hope you don’t mind me linking to this article on my blog.

Even though all of these points should be carried out, it rarely happens in the consulting business. Unfortunately.

Again, thanks!

Hey John,

Just discovered and bookmarked this article. Fantastic work. Apologies if I missed it, but I always have a step in the change procedure to verify console to the device being changed.

Console access can easily be checked as the change is being written and as part of the technician’s script immediately prior to pasting changes. I don’t know how many outage post-mortems I’ve attended where they say, “tried to fall back to console, but console wasn’t working”. Consoles are low-cost insurance.