When I saw that Mellanox was presenting at Networking Field Day 17, I was definitely curious. When I found out that I would in fact be watching a joint presentation by Mellanox, Cumulus Networks and Ixia, it's fair to say my interest was piqued. Why would these three companies present together?

It turns out that these three companies present quite a compelling story, both individually, as you would probably expect, and in combination. This post looks at the role of Mellanox Ethernet switches in an Ethernet fabric.

Mellanox

To me, Mellanox has been one of those 'behind the scenes' companies whose hardware is all over the place but whose name, in Ethernet circles at least, is less well known. Storage and compute engineers, on the other hand, are likely more familiar with the Mellanox name, especially in the context of InfiniBand switches and network interface cards (NICs). In 2016 Mellanox acquired EZchip, allowing the development of some very capable Ethernet switches and an expansion of the company's portfolio. To paraphrase Amit Katz (VP, WW Ethernet Switch), Mellanox connects PCI Express interfaces together by building NICs, cables and switches.

At the Networking Field Day event in February 2018, Mellanox proposed that most of the layer 2 switching protocols offered today to solve load distribution and loop prevention issues are irrelevant, and suggested that EVPN/VXLAN can do everything the data center needs without protocols like TRILL, Spanning Tree, SPB and so forth. More specifically, Mellanox offers a broad range of 10/25/40/50/100Gbps Ethernet switches based on its Spectrum ASIC, which supports VXLAN routing without packet recirculation (i.e. VXLAN routing is accomplished in a single pass through the ASIC).
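Routing into or out of a VXLAN tunnel means doing a route lookup and also adding or stripping the VXLAN encapsulation; an ASIC that cannot do both in one pass has to send the packet through its pipeline a second time. For readers less familiar with VXLAN, here is a minimal sketch of the 8-byte header defined in RFC 7348 that gets added or removed. It illustrates the header format only and says nothing about how Spectrum implements this in hardware; the function names are hypothetical.

```python
import struct

VXLAN_UDP_PORT = 4789   # IANA-assigned VXLAN UDP destination port (RFC 7348)
FLAG_VNI_VALID = 0x08   # the "I" flag: the VNI field is valid

def vxlan_header(vni: int) -> bytes:
    """Build the header: flags (8) | reserved (24) | VNI (24) | reserved (8)."""
    if not 0 <= vni < 2**24:
        raise ValueError("VNI must fit in 24 bits")
    # First 32-bit word: flags in the top byte, 24 reserved bits left at zero.
    # Second 32-bit word: VNI in the top 24 bits, 8 reserved bits left at zero.
    return struct.pack("!II", FLAG_VNI_VALID << 24, vni << 8)

def parse_vni(header: bytes) -> int:
    """Extract the VNI from a VXLAN header, checking the I flag first."""
    flags_word, vni_word = struct.unpack("!II", header[:8])
    if not (flags_word >> 24) & FLAG_VNI_VALID:
        raise ValueError("I flag not set")
    return vni_word >> 8

def encapsulate(inner_frame: bytes, vni: int) -> bytes:
    """Prepend the VXLAN header; the result is the UDP payload.
    Outer Ethernet/IP/UDP headers are deliberately omitted from this sketch."""
    return vxlan_header(vni) + inner_frame
```

For example, `vxlan_header(5000)` yields `08 00 00 00 00 13 88 00`, with the VNI 5000 (0x1388) sitting in bytes 4 to 6.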

I'm not going to repeat everything that's in the video because, well, you could just watch the video yourself, but here are some of the highlights I took away about the Mellanox switching products:

- Support for multiple network operating systems installed via ONIE, including, of course, Cumulus Linux, but also Microsoft SONiC, and Linux with SAI and switchdev. Access to the switch in a disaggregated manner falls within Mellanox's Open Ethernet initiative

- Mellanox's own NOS, MLNX-OS (now Mellanox Onyx), supports OpenFlow 1.0 and 1.3.

- All switching features are available without an additional license

- VXLAN routing is supported, both symmetric and asymmetric

- No special license required for features like EVPN VXLAN

- 10, 25, 50 and 100Gbps support throughout the switch range; 40Gbps on at least one model, but then, is anybody really looking at 40Gbps any more?

In case you do want the full story or simply want more detail, here’s the video of Mellanox presenting at Networking Field Day 17:

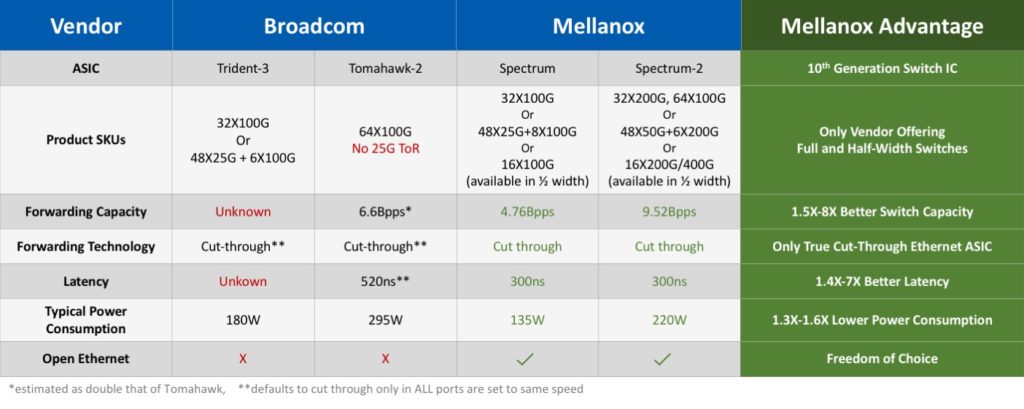

I felt that Mellanox made a pretty convincing case for considering their hardware when looking for a white box vendor, and looking at some of the performance statistics for the Spectrum / Spectrum 2 ASICs in comparison to Broadcom's offerings, it becomes even more obvious that Mellanox should not be dismissed as an 'also ran':

Granted, this chart was produced by Mellanox, so it will undoubtedly focus on areas where Spectrum thoroughly trounces Tomahawk and Trident 3, but it's effective, don't you think? There is also a Tolly report, commissioned by Mellanox and downloadable after registering, which compares Mellanox Spectrum and Broadcom Tomahawk based switches on packet loss and latency.

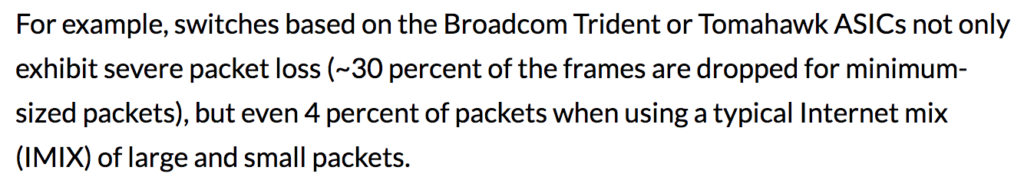

Closely related to this, the quote below is from a Mellanox guest post on Packet Pushers called 'Rethinking Big Buffers'; I would be surprised if you don't spit your coffee out halfway through reading it:

This claim is a direct reference to data from the Tolly report. I do not have permission to reproduce content from the report here, but if you register to download it, the exact figures are shown in Table 1 on page 5. Equally curious are the results showing which traffic gets dropped when an output port is oversubscribed. While the Mellanox Spectrum ASIC shows lost frames spread equally across all the contributing input ports, the Broadcom Tomahawk results at times show a very strange and unequal distribution of packet loss, favoring the forwarding of frames from some input ports over others. This is probably not what we, as consumers of the technology, would have expected to happen.
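One way to put a single number on "spread equally" versus "unequal distribution" is to compute each input port's loss ratio (dropped / offered) and then Jain's fairness index over those ratios: 1.0 means every port lost the same fraction of its traffic, and 1/n means one port absorbed all the loss. This is a minimal sketch of my own choosing, not the methodology used in the Tolly report, and the counters in the usage line are made-up illustrative values, not results from any test.

```python
from typing import Sequence

def loss_ratios(offered: Sequence[int], dropped: Sequence[int]) -> list[float]:
    """Fraction of each input port's offered frames that was dropped."""
    return [d / o for o, d in zip(offered, dropped)]

def jain_index(values: Sequence[float]) -> float:
    """Jain's fairness index: (sum x)^2 / (n * sum x^2), ranging from 1/n to 1."""
    total = sum(values)
    squares = sum(v * v for v in values)
    if squares == 0:
        return 1.0  # no loss on any port: trivially even
    return total * total / (len(values) * squares)
```

With hypothetical counters of 1000 frames offered per port, `jain_index(loss_ratios([1000] * 4, [250] * 4))` is 1.0 (even loss), while `jain_index(loss_ratios([1000] * 4, [1000, 0, 0, 0]))` is 0.25 (one port takes all the loss).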

Conclusions

Mellanox made a pretty strong case for considering its switches when building a white box based Ethernet switching fabric. But what of Ixia and Cumulus? I will address these companies in subsequent posts.

Disclosures

I was an invited delegate to Networking Field Day 17, at which all the companies listed above presented. Sponsors pay for presentation time in front of the NFD delegates, and in turn that money funds the delegates' transport, accommodation and nutritional needs while there. With that said, I always want to make it clear that I don't get paid anything to be there (I took vacation), and I'm under no obligation to write so much as a single word about any of the presenting companies, let alone write anything nice about them. If I have written about a sponsoring company, you can rest assured that I'm saying what I want to say and I'm writing it because I want to, not because I have to.

You can read more here if you would like.
